Eric S. Raymond has released doclifter 2.0, an open source tool that transcodes {n,t,g}roff documentation to DocBook. Version 2.0 adds support for man, mandoc, ms, me, and TkMan source documents as well. Raymond claims the "result is usable without further hand-hacking about 95% of the time." This release fixes bugs. Doclifter is written in Python, and requires Python 2.2a1. doclifter is published under the GPL.

Peter Jipsen has released ASCIIMathML 1.4.3, a JavaScript program that converts calculator-style ASCII math notation and some LaTeX formulas to Presentation MathML while a Web page loads. The resulting MathML can be displayed in Mozilla-based browsers and Internet Explorer 6 with MathPlayer.
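ASCIIMathML itself is JavaScript that runs in the browser, and the Python sketch below is not its algorithm. It is only a toy illustration of the kind of mapping involved: identifiers become `mi`, numbers become `mn`, other symbols become `mo`, and `a^b` becomes `msup`. The name `ascii_to_mathml` is hypothetical, and everything else ASCIIMathML handles (fractions, grouping, LaTeX commands) is deliberately left out.

```python
import re
import xml.etree.ElementTree as ET

# One token per match: a number, a single-letter identifier, or any other non-space character.
TOKEN = re.compile(r"\s*(?:(\d+(?:\.\d+)?)|([A-Za-z])|(\S))")


def atom(token):
    """Map one token to the matching Presentation MathML leaf element."""
    number, identifier, operator = token
    if number:
        el = ET.Element("mn")
        el.text = number
    elif identifier:
        el = ET.Element("mi")
        el.text = identifier
    else:
        el = ET.Element("mo")
        el.text = operator
    return el


def ascii_to_mathml(expr):
    """Toy converter: identifiers, numbers, operators, and single-token a^b superscripts."""
    tokens = [m.groups() for m in TOKEN.finditer(expr)]
    row = ET.Element("mrow")
    i = 0
    while i < len(tokens):
        if tokens[i][2] == "^" and len(row) and i + 1 < len(tokens):
            # Wrap the previous element and the next token in an msup.
            base = row[-1]
            row.remove(base)
            sup = ET.SubElement(row, "msup")
            sup.append(base)
            sup.append(atom(tokens[i + 1]))
            i += 2
        else:
            row.append(atom(tokens[i]))
            i += 1
    math = ET.Element("math", xmlns="http://www.w3.org/1998/Math/MathML")
    math.append(row)
    return ET.tostring(math, encoding="unicode")


print(ascii_to_mathml("x^2+1"))
```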

Satimage-software has released XMLLib 2.0, an XML parser for AppleScript based on the Gnome Project's libxml. XMLLib supports DOM, XPath, and XSLT. Mac OS X 10.2.8 or later is required.

I'll be travelling for the holidays for the next week or so. Updates will likely be fairly slow until I return.

The OpenOffice Project has released OpenOffice 1.1.4, an open source office suite for Linux and Windows that saves all its files as zipped XML. I used the previous 1.0 version to write Effective XML. 1.1.4 is exclusively a bug fix release. OpenOffice is dual licensed under the LGPL and Sun Industry Standards Source License.

The Apache XML Project has released Xalan-C++ 1.9, an open source XSLT processor written in standard C++. Version 1.9 supports memory management, enables iterative processing, can pool all text node strings, and fixes assorted bugs.

Michael Kay has released Saxon 8.2, an implementation of XSLT 2.0, XPath 2.0, and XQuery in Java. Saxon 8.2 is published in two versions, both of which require Java 1.4. Saxon 8.2B is an open source product published under the Mozilla Public License 1.0 that "implements the 'basic' conformance level for XSLT 2.0 and XQuery." Saxon 8.2SA is a £250.00 payware version that "allows stylesheets and queries to import an XML Schema, to validate input and output trees against a schema, and to select elements and attributes based on their schema-defined type. Saxon-SA also incorporates a free-standing XML Schema validator. In addition Saxon-SA incorporates some advanced extensions not available in the Saxon-B product. These include a try/catch capability for catching dynamic errors, improved error diagnostics, support for higher-order functions, and additional facilities in XQuery including support for grouping, advanced regular expression analysis, and formatting of dates and numbers." Version 8.2 adds support for XOM, supports the JAXP 1.3 XPath and schema validation APIs, improves performance in a few areas, and is more backwards compatible with XSLT 1.0 stylesheets. Upgrades from 8.x are free.

I am pleased to announce what I expect is the final beta and release candidate of XOM 1.0, my open source dual streaming/tree-based API for processing XML with Java. XOM focuses on correctness, simplicity, and performance, in that order. This final (I hope) beta makes a number of improvements to performance in various areas of the API. Depending on the nature of your programs and documents, you should see speed-ups of somewhere between 0 and 20% compared to the previous beta. There are no over-the-covers changes in this release. Under the covers, a few classes have undergone major rewrites, and a couple of non-public classes have been removed. All the unit tests (now over a thousand of them) still pass, but please do check this release out with your own code. If no problems are identified in this beta, I expect to officially release XOM 1.0 possibly as early as tomorrow, and certainly by the end of the year.

The W3C has released the final recommendation of XInclude 1.0.

There do not appear to be any significant changes since the proposed recommendation was published a couple of months ago.

Briefly, XInclude describes a means to build complex documents out of simpler documents by replacing elements like <xi:include href="chapter1.xml"/> with the contents of the file they refer to.
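Python's standard library ships `xml.etree.ElementInclude`, which implements a subset of XInclude (no XPointer support, for instance), so it makes a convenient way to watch the replacement happen. To keep the sketch self-contained, it assumes a hypothetical in-memory document store instead of real files; the custom `loader` callback stands in for fetching `chapter1.xml`.

```python
import xml.etree.ElementTree as ET
from xml.etree import ElementInclude

# Hypothetical in-memory document store standing in for files on disk.
DOCUMENTS = {
    "chapter1.xml": "<chapter><title>Getting Started</title></chapter>",
}


def loader(href, parse, encoding=None):
    """Resolve an xi:include href against DOCUMENTS instead of the file system."""
    text = DOCUMENTS[href]
    if parse == "xml":
        return ET.fromstring(text)  # parse="xml": include the parsed element
    return text  # parse="text": include the raw characters


book = ET.fromstring(
    '<book xmlns:xi="http://www.w3.org/2001/XInclude">'
    '<xi:include href="chapter1.xml"/>'
    "</book>"
)
ElementInclude.include(book, loader=loader)  # replaces each xi:include in place
result = ET.tostring(book, encoding="unicode")
print(result)
```

The `xi:include` element is gone from the tree and the parsed `chapter` element sits where it used to be.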

For more details, I've written a Brief Introduction to XInclude.

There don't appear to be any fully conformant implementations yet.

However, XOM's XIncluder class implements all the required functionality, and passes all the tests that don't depend on optional features. Specifically, XOM does not support unparsed entities, notations, or the xpointer XPointer scheme. The Gnome Project's libxml also does a pretty good job with XInclude, and does support the xpointer scheme, though it doesn't handle all the edge cases quite as rigorously as XOM does. I've also written XInclude engines for DOM, JDOM, and SAX. However, these are much buggier and more incomplete than the XOM version. Some improvements have been made in CVS since the last milestone drop. However, they still flunk lots of the test cases, and may even get stuck in infinite loops or otherwise die horrible deaths when faced with relatively complex operations.

The Apache XML Project has released XML Security v1.2, an implementation of security-related XML standards including Canonical XML, XML Encryption, and XML Signature Syntax and Processing. A compatible Java Cryptography Extension provider is required. Version 1.2 improves performance and offers "Easier JCE integration".
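Canonical XML is what makes signatures workable: two serializations that differ only in attribute order, quoting, or empty-element syntax must produce identical bytes before they are hashed. Python 3.8 and later expose this as `xml.etree.ElementTree.canonicalize()`. Note that it implements C14N 2.0 rather than the Canonical XML 1.0 that Apache XML Security targets, so treat this as an illustration of the idea, not of that library.

```python
import xml.etree.ElementTree as ET

# Two byte-for-byte different serializations of the same element.
a = '<doc a="2" z="1"/>'
b = "<doc z='1'   a='2'></doc>"

canon_a = ET.canonicalize(a)
canon_b = ET.canonicalize(b)

# Attributes are sorted, quotes normalized, and empty elements written as start/end tag pairs.
print(canon_a)             # <doc a="2" z="1"></doc>
print(canon_a == canon_b)  # True
```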

The Mozilla Project has released Mozilla 1.7.5. This release improves IE compatibility with non-standards-compliant sites and adds NPRuntime support. "NPRuntime is an extension to the Netscape Plugin API that was developed in cooperation with Apple, Opera, and a group of plugin vendors." More importantly, this release fixes over three hundred assorted bugs. Sadly, none of them seem to be ones that have been bedeviling me.

This release isn't too critical, but given the large number of fixes you should probably upgrade when you get a minute. Most of these fixes will be rolled into Firefox 1.1 sometime next year.

The W3C XSL Working Group has published the second working draft of Extensible Stylesheet Language (XSL) Version 1.1. Despite the more generic name, this actually only covers XSL Formatting Objects, not XSL Transformations. New features in 1.1 include:

- Multiple flows

- Change marks

- Back of the book indexing

- Bookmarks

- Markers in tables

- fo:page-number-citation-last

- fo:page-sequence-wrapper

- clear and float inside and outside

- prefixes and suffixes for page numbers

The W3C Technical Architecture Group (TAG) has published Architecture of the World Wide Web, First Edition. Quoting from the abstract:

The World Wide Web uses relatively simple technologies with sufficient scalability, efficiency and utility that they have resulted in a remarkable information space of interrelated resources, growing across languages, cultures, and media. In an effort to preserve these properties of the information space as the technologies evolve, this architecture document discusses the core design components of the Web. They are identification of resources, representation of resource state, and the protocols that support the interaction between agents and resources in the space. We relate core design components, constraints, and good practices to the principles and properties they support.

It's pretty good stuff overall. Everyone working on the Web, the Semantic Web, or with XML or URIs should read it.

Jens Låås has released version 1.5.4 of xmlclitools, a set of four Linux command-line tools for searching, modifying, and formatting XML data. The tools are designed to work in conjunction with standard utilities such as grep, sort, and shell scripts. Version 1.5.4 allows UTF-8 output from xmlgrep. They are published under the LGPL.

Rich Salz and Dave Orchard have written a proposal for a URN scheme for XML QNames. Basically they propose that a qualified name such as xsl:template could be written as urn:qname:xsl:template:http://www.w3.org/1999/XSL/Transform. The default namespace can be handled by omitting the prefix. For example, the XHTML p element would be urn:qname::p:http://www.w3.org/1999/xhtml. You can ignore the prefix by using an asterisk. For instance, urn:qname:*:rect:http://www.w3.org/2000/svg matches both svg:rect and rect in the default SVG namespace.

I've expressed a few technical and editorial quibbles with the current draft to the authors, mostly revolving around the distinctions between URI, IRI, and URI reference, but nothing fundamental.

Overall this seems like a pretty solid idea.

I'm not sure exactly where this would be used, but it sounds like it ought to be useful somewhere.

The W3C Web Services Addressing Working Group has posted three new working drafts on the subject of (what else?) web services addressing. Web Services Addressing - Core defines generic extensions to the Infoset for endpoint references and message addressing properties. Web Services Addressing - SOAP Binding and Web Services Addressing - WSDL Binding describe how the abstract properties defined in the core spec are implemented in SOAP and WSDL respectively.

The W3C Multimodal Interaction working group has posted the fourth public working draft of EMMA: Extensible MultiModal Annotation markup language. According to the abstract, this spec "provides details of an XML markup language for describing the interpretation of user input. Examples of interpretation of user input are a transcription into words of a raw signal, for instance derived from speech, pen or keystroke input, a set of attribute/value pairs describing their meaning, or a set of attribute/value pairs describing a gesture. The interpretation of the user's input is expected to be generated by signal interpretation processes, such as speech and ink recognition, semantic interpreters, and other types of processors for use by components that act on the user's inputs such as interaction managers."

Sleepycat Software has released Berkeley DB XML 2.0.7, an open source "application-specific, embedded data manager for native XML data" based on Berkeley DB. It supports the July working drafts of XQuery 1.0 and XPath 2.0. It includes C++, Java, Perl, Python, TCL and PHP APIs. This is the first public release in the 2.0 series.

Benjamin Pasero has posted the first release candidate of RSSOwl 1.0, an open source RSS reader written in Java and based on the SWT toolkit. RSSOwl is the best open source RSS client I've seen written in Java. That said, it still doesn't feel right to me. Even ignoring various small bugs and user interface inconsistencies, news just doesn't flow in this client. The three-pane layout, which separates the news item titles from the news items themselves and places the titles above the text of each item, doesn't work well for me.

The Mozilla Project has released Sage 1.3, an open source RSS plug-in for Firefox. Overall, I think this works better than RSSOwl. It also uses a three pane layout, but one of the panes is almost a full sized browser window, and includes complete news items, one after the other. This is almost good enough to actually use. However, it's got three major missing features:

- It does not respect the browser's font preferences. The default font is way too small for me, nor is it my preferred font face for onscreen reading.

- It does not aggregate news items from different RSS feeds. (RSSOwl does.)

- It does not hide news items I've already read.

Both RSSOwl and Sage still feel like toys to me, not serious tools. Neither of these products seems capable of handling hundreds of blogs and thousands of news items. Oh, don't get me wrong. I'm sure you could subscribe to hundreds, probably thousands, of feeds in either one of them; and it wouldn't crash or slow down. But the user interface is just not adequate for managing such large, ongoing, constantly updated information collections. So far I'm not sure if there is an RSS client that is capable of this. I don't know what a good RSS client will look like, but I'll know it when I see it, and so far I haven't seen it. RSS feeds are a new way of interacting with information, and we need some serious user interaction studies to understand how to properly design new user interfaces that fit. The old metaphors aren't working any more.

IBM's alphaWorks has released IBM Forms for Mobile Devices, "a Java-based, distributed software solution that, using XForms (a W3C standard for forms definition), enables pervasive mobile devices to access and complete business forms. This forms solution allows developers to quickly create, deploy and use forms based applications. This software demonstrates the ability of intermittently connected mobile devices to access and complete business forms that are stored locally on the mobile device. The completed forms are transferred to a server for additional processing when connectivity is available."

Bare Bones Software has released version 8.0.3 of BBEdit, my preferred text editor on the Mac. This release adds various small features and fixes a number of bugs. BBEdit is $179 payware. Upgrades from 8.0 are free. Upgrades from earlier versions are $49 for 7.0 owners and $59 for owners of earlier versions.

Ryan Tomayko has commenced work on Kid, "a simple Pythonic template language for XML based vocabularies. It was spawned as a result of a kinky love triangle between XSLT, TAL, and PHP."

The language is based on just five attributes: kid:repeat, kid:if, kid:content, kid:omit, and kid:replace; each of which contains a Python expression. Since this expression can point to externally defined functions, this is most of what you need. In addition there are attribute value templates similar to XSLT's, and <?kid?> processing instructions can embed code directly in the XML document.
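Attribute value templates are simple to sketch. The function below uses XSLT's convention, where `{expr}` is evaluated and `{{` or `}}` stand for literal braces; Kid's actual delimiters and scoping rules may differ, and `expand_avt` is a hypothetical name. Like any template language that embeds Python expressions, it assumes the template is trusted, since it calls `eval`.

```python
import re

# "{{" and "}}" are escaped braces; "{...}" (no nested braces) is an expression.
_AVT = re.compile(r"\{\{|\}\}|\{([^{}]*)\}")


def expand_avt(template, env):
    """Expand XSLT-style {expr} placeholders in an attribute value using names from env."""
    def substitute(match):
        token = match.group(0)
        if token == "{{":
            return "{"
        if token == "}}":
            return "}"
        # Builtins are disabled, so expressions can use only the names supplied in env.
        return str(eval(match.group(1), {"__builtins__": {}}, dict(env)))

    return _AVT.sub(substitute, template)


print(expand_avt("item-{n + 1}", {"n": 1}))  # item-2
print(expand_avt("{{literal}}", {}))         # {literal}
```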

I'm not sure I approve of the use of processing instructions in the language, but I'm not sure I don't either. Not having to escape XML-significant symbols like < and & in the embedded code is convenient.

Kid templates are compiled to Python byte-code and can be imported and invoked like normal Python code.

Kid templates generate SAX events and can be used with existing libraries that work along SAX pipelines.
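Emitting SAX events instead of a finished string is what lets a template plug into an existing pipeline. In the sketch below, `emit_list` is a hypothetical stand-in for a compiled template, not Kid's API. It fires events at whatever handler it is given: here a pass-through filter built on the standard library's `XMLFilterBase` that upper-cases text, which in turn feeds an `XMLGenerator` that serializes the result.

```python
import io
from xml.sax.saxutils import XMLFilterBase, XMLGenerator


class UpperCaseText(XMLFilterBase):
    """Pass-through SAX filter that upper-cases character data on its way downstream."""

    def characters(self, content):
        super().characters(content.upper())


def emit_list(handler, items):
    """Hypothetical stand-in for a compiled template: fires SAX events, builds no string."""
    handler.startDocument()
    handler.startElement("ul", {})
    for item in items:
        handler.startElement("li", {})
        handler.characters(item)
        handler.endElement("li")
    handler.endElement("ul")
    handler.endDocument()


out = io.StringIO()
pipeline = UpperCaseText()
pipeline.setContentHandler(XMLGenerator(out, encoding="utf-8"))
emit_list(pipeline, ["alice", "bob"])
print(out.getvalue())
```

Swapping the filter or the final handler changes the output without touching the template, which is the point of working in events.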

Overall it looks like a fairly well-designed, well-thought-out system that has clearly learned from the mistakes of gnarly systems like PHP, JSP, and ASP. Why am I not surprised to see this coming out of the Python community?

The Mozilla Project has posted Camino 0.8.2, a Mac OS X web browser based on the Gecko 1.7 rendering engine and the Quartz GUI toolkit. It supports pretty much all the technologies that Mozilla does: HTML, XHTML, CSS, XML, XSLT, etc. 0.8.2 is a bug fix release. Mac OS X 10.1.5 or later is required.

Kiyut has released Sketsa 2.2.1, a $29 payware SVG editor written in Java. Java 1.4.1 or later is required.

In anticipation of the upcoming release of the XInclude recommendation, I've posted a brief introduction to XInclude on The Cafes. This is an updated version of an article I've published in a couple of other venues over the last few years.

By the way, if you're using Internet Explorer and have had problems with the comment form on the Cafes, that has now been at least partially fixed. It still looks ugly, but at least it doesn't slide under the sidebar any more. The trick was setting width: 100%; on the form element.

The W3C has released version 8.7 of Amaya, their open source testbed web browser and authoring tool for Solaris, Linux, Windows, and Mac OS X that supports HTML 4.01, XHTML 1.0, XHTML Basic, XHTML 1.1, HTTP 1.1, MathML 2.0, SVG, and much of CSS 2. Besides bug fixes, there are a few new features in this release, including the display of non-breaking spaces and tabs in the source view as ~ and », and menu items to generate section numbers and tables of contents.

The Mozilla Project has posted the fifth alpha of Mozilla 1.8.

New features in 1.8 include FTP uploads, improved junk mail filtering, better Eudora import, and an increase in the number of cookies that Mozilla can remember. It also makes various small user interface improvements, gives users the option to disable CSS globally or on a per-page basis, and adds support for CSS quotes. Alpha 5 fixes a slew of bugs and enables support for CSS columns.

The W3C Authoring Tool Accessibility Guidelines Working Group has posted a working draft of Implementation Techniques for Authoring Tool Accessibility Guidelines 2.0. "This document provides non-normative information to authoring tool developers who wish to satisfy the checkpoints of 'Authoring Tool Accessibility Guidelines 2.0' [ATAG20]. It includes suggested techniques, sample strategies in deployed tools, and references to other accessibility resources (such as platform-specific software accessibility guidelines) that provide additional information on how a tool may satisfy each checkpoint."

P & P Software has released XSLTdoc 1.0, a free-as-in-speech (GPL) Javadoc-like tool for XSLT stylesheets. Instead of using comments, it uses elements in the http://www.pnp-software.com/XSLTdoc namespace. These are top-level elements that appear before each documented top-level XSLT element. The processor is itself implemented in XSLT 2.0. The XSLT stylesheets are not complete unto themselves. A config file is also required, though mostly this just replaces what would be provided by command line arguments in javadoc. However, it should be possible to generate truly JavaDoc-like documentation purely from the XSLT stylesheets themselves, without any extra files needing to be consulted.
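Because the documentation lives in ordinary elements rather than comments, collecting it takes only a namespace-aware walk over the stylesheet's top-level children. The sketch below pairs each XSLTdoc element with the XSLT element that follows it. The `xd:doc` element name and the text-only treatment of its content are assumptions for illustration, not a description of XSLTdoc's actual vocabulary or processing.

```python
import xml.etree.ElementTree as ET

XSL = "{http://www.w3.org/1999/XSL/Transform}"
XD = "{http://www.pnp-software.com/XSLTdoc}"


def pair_docs(stylesheet_xml):
    """Return (kind, name, doc) for each top-level XSLT element; doc is None if undocumented."""
    root = ET.fromstring(stylesheet_xml)
    pending = None  # text of the most recent documentation element not yet attached
    pairs = []
    for child in root:  # top-level children only
        if child.tag.startswith(XD):
            pending = " ".join("".join(child.itertext()).split())
        elif child.tag.startswith(XSL):
            name = child.get("name") or child.get("match")
            pairs.append((child.tag[len(XSL):], name, pending))
            pending = None
    return pairs


STYLESHEET = """<xsl:stylesheet version="2.0"
    xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
    xmlns:xd="http://www.pnp-software.com/XSLTdoc">
  <xd:doc>Renders a chapter title.</xd:doc>
  <xsl:template match="title"><h1><xsl:apply-templates/></h1></xsl:template>
  <xsl:template name="footer"/>
</xsl:stylesheet>"""

for kind, name, doc in pair_docs(STYLESHEET):
    print(kind, name, doc)
```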

The OpenOffice Project has posted the first release candidate of OpenOffice 1.1.4, an open source office suite for Linux and Windows that saves all its files as zipped XML. I used the previous 1.0 version to write Effective XML. 1.1.4 is exclusively a bug fix release. OpenOffice is dual licensed under the LGPL and Sun Industry Standards Source License.

The IETF has posted working drafts for five more URL schemes:

(Does anyone still use Prospero any more? For that matter, does anyone still use gopher?) These drafts all cover schemes that were originally documented in the soon-to-be-historic RFC 1738. NNTP URLs are deprecated in favor of news. Otherwise, none of them make significant changes.

The IETF has posted a working draft of The file URI Scheme. "This document specifies the file Uniform Resource Identifier (URI) scheme that was originally specified in RFC 1738. The purpose of this document is to allow RFC 1738 to be moved to historic while keeping the information about the scheme on standards track." Sadly, this draft does not attempt to correct the numerous ambiguities and inconsistencies in either the original RFC or the practice of file URLs in software today.
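One way to see the ambiguity is to hand a few of the spellings found in the wild to a generic URI parser. Python's `urllib.parse.urlsplit` accepts all four below, but it only splits them. Whether the first three name the same local file, and whether the fourth is a mistake or a request for a host called `etc`, is left to each application.

```python
from urllib.parse import urlsplit

# Spellings found in the wild for "the local file /etc/hosts", plus one classic mistake.
CANDIDATES = [
    "file:///etc/hosts",           # empty authority
    "file://localhost/etc/hosts",  # explicit localhost authority
    "file:/etc/hosts",             # no authority component at all
    "file://etc/hosts",            # "etc" is parsed as the host name
]

for url in CANDIDATES:
    parts = urlsplit(url)
    print(f"{url:28} host={parts.netloc!r:13} path={parts.path!r}")
```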

The IETF has also posted another last call working draft of Internationalized Resource Identifiers (IRIs). "An IRI is a sequence of characters from the Universal Character Set (Unicode/ISO 10646). A mapping from IRIs to URIs is defined, which means that IRIs can be used instead of URIs where appropriate to identify resources." In other words, this lets you write URLs that use non-ASCII characters such as http://www.libération.fr/. The non-ASCII characters would be converted to a genuine URI using hexadecimally escaped UTF-8. For instance, http://www.libération.fr/ becomes http://www.lib%C3%A9ration.fr/. There's also an alternative, more complicated syntax to be used when the DNS doesn't allow percent-escaped domain names. However, the other parts of the IRI (fragment ID, path, scheme, etc.) always use percent escaping. The changes in this draft mostly focus on specifying different possible mechanisms for comparing IRIs for equality.
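The IRI-to-URI mapping is easy to sketch with the standard library. `iri_to_uri` percent-encodes every non-ASCII character as its UTF-8 bytes while leaving URI delimiters and existing `%` escapes alone. `iri_to_uri_idna` shows the alternative for the host name, using Python's built-in `idna` codec (IDNA 2003). Both function names are hypothetical, and the sketch skips details such as bidirectional text and userinfo or port handling.

```python
from urllib.parse import quote, urlsplit, urlunsplit

# Delimiters that already have meaning in URI syntax, plus "%" so existing escapes survive.
_KEEP = ":/?#[]@!$&'()*+,;=%"


def iri_to_uri(iri):
    """Percent-encode each non-ASCII character as its UTF-8 bytes."""
    return quote(iri, safe=_KEEP)


def iri_to_uri_idna(iri):
    """Variant that converts the host with IDNA instead of percent escapes.

    Assumes an absolute IRI with a host and no userinfo or port.
    """
    parts = urlsplit(iri)
    host = parts.hostname.encode("idna").decode("ascii")
    return urlunsplit((
        parts.scheme,
        host,
        quote(parts.path, safe=_KEEP),
        quote(parts.query, safe=_KEEP),
        quote(parts.fragment, safe=_KEEP),
    ))


print(iri_to_uri("http://www.libération.fr/"))       # http://www.lib%C3%A9ration.fr/
print(iri_to_uri_idna("http://www.libération.fr/"))  # host becomes an xn-- label
```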

The W3C Web Services Internationalization Task Force has published the second public working draft of Requirements for the Internationalization of Web Services. According to the intro,

A Web Service is a software application identified by a URI [RFC2396], whose interfaces and binding are capable of being defined, described and discovered by XML artifacts, and which supports direct interactions with other software applications using XML-based messages via Internet-based protocols. The full range of application functionality can be exposed in a Web service.

The W3C Internationalization Working Group, Web Services Task Force, was chartered to examine Web Services for internationalization issues. The result of this work is the Web Services Internationalization Usage Scenarios document [WSIUS]. Some of the scenarios in that document demonstrate that, in order to achieve worldwide usability, internationalization options must be exposed in a consistent way in the definitions, descriptions, messages, and discovery mechanisms that make up Web services.

According to the status section, "There were only very few changes since the last publication. The main change is the addition of requirement R007 about integration with the overall Web services architecture and existing technologies. The wording of the other requirements was changed to not favor solutions that are still under discussion. Text has been streamlined and references have been updated."

Michael Smith has posted version 1.67.2 of the DocBook XSL stylesheets. These support transforms to HTML, XHTML, and XSL-FO. This is mostly a bug fix release but does expand customizability in a few areas including tables and tables of contents.

The W3C Multimodal Interaction Working Group has posted a working draft of the Dynamic Properties Framework. According to the abstract, "This document defines platform and language neutral interfaces that provide Web applications with access to a hierarchy of dynamic properties representing device capabilities, configurations, user preferences and environmental conditions."

RenderX has released version 4.1 of XEP, its payware XSL Formatting Objects to PDF and PostScript converter. XEP also supports part of Scalable Vector Graphics (SVG) 1.1. New features in 4.1 include embedding of Adobe Compact Font Format (CFF) fonts, improved memory management, and reworked algorithms for automatic table layout. The basic client is $299.95. The developer edition with an API is $999.95. The server version is $3999.95. Updates from 3.0 range from free to full-price depending on when you bought it.

The Cafes seems to be off and running. There were a few initial glitches that I have now cleaned up. There's some interesting discussion in the comments fora for On Iterators and Indexes and Overloading Int Considered Harmful. Today's project is to make the staging server work enough like the production server that I can use it for testing and debugging without affecting the production server. Yesterday I got stymied by a slight difference in how the PHP engines were configured. (The staging server didn't have libtidy support that the site relies on heavily.) I had planned to post a backlist article today, but instead I found myself forced to think about spam.

Colin Paul Adams has commenced work on Gestalt, an open source, non-schema aware XSLT 2.0 processor written in Eiffel. Gestalt is published under the Eiffel Forum License V2.0.

The W3C Quality Assurance (QA) Activity has published a revised working draft of the QA Framework: Specification Guidelines. Quoting from the abstract, "A lot of effort goes into writing a good specification. It takes more than knowledge of the technology to make a specification precise, implementable and testable. It takes planning, organization, and foresight about the technology and how it will be implemented and used. The goal of this document is to help W3C editors write better specifications, by making a specification easier to interpret without ambiguity and clearer as to what is required in order to conform. It focuses on how to define and specify conformance for a specification. Additionally, it addresses how a specification might allow variation among conforming implementations. The document is presented as a set of guidelines or requirements, supplemented with good practices, examples, and techniques."

Altsoft N.V. has released Xml2PDF 2.1, a $49 payware Windows program for converting XSL Formatting Objects documents into PDF files. Version 2.1 makes various optimizations and adds support for patterns in SVG and XML image embedding with data: URLs.

Lately I've noticed that outlets for article-sized content are becoming fewer and farther between. I've got lots of things I'd like to write about at a length somewhat longer than a typical Cafe con Leche news item, but much shorter than a full book; so I decided to do something about it. Hence I am announcing The Cafes, a new site for content that falls somewhere in the large territory between a blog post and a book. The initial articles include:

The Cafes will not be updated as regularly as Cafe con Leche; just when I've got something I want to write about. It's going to focus more on How-Tos and technical material, and less on product announcements. The goal is to write more substantive material that will be valuable for a longer period of time. I do have an RSS feed for the site to announce the most recent articles, but my assumption is that most readers will find the site through search engines and links, when looking for information on a specific topic, not by checking in every day. I will be adding new articles at a rate of about one a day this week, as I've got quite a backlog to plow through. Indeed the backlog was one of the motivating factors for launching the site.

Also unlike Cafe au Lait/con Leche, the Cafes includes a place for reader comments on each article. I rolled my own comments system on top of PHP and MySQL because I really couldn't find an existing system that did what I wanted it to do. At the same time, since no one's done comments like this before, I pretty much had to code the system from scratch, so it's more than likely there are some bugs flitting around, waiting to be squashed. If you happen on any of the critters, please let me know.

Judging by my server logs, a few of you have found the site already. There's still a lot of work to be done, but I think it's ready to be opened to the public. Check it out, and let me know what you think. Please post any comments you have on the Welcome to the Cafes page. I think you'll like what you see. I'm very excited about The Cafes, and I think it's going to be a very interesting and productive destination. Happy XML!

The W3C Authoring Tool Accessibility Guidelines Working Group has posted the last call working draft of Authoring Tool Accessibility Guidelines 2.0. "This specification provides guidelines for designing authoring tools that lower barriers to Web accessibility for people with disabilities. An authoring tool that conforms to these guidelines will promote accessibility by providing an accessible authoring interface to authors with disabilities as well as enabling, supporting, and promoting the production of accessible Web content by all authors."

The W3C Quality Assurance Working Group has published The QA Handbook, "a non-normative handbook about the process and operational aspects of certain quality assurance practices of W3C's Working Groups, with particular focus on testability and test topics. It is intended for Working Group chairs and team contacts. It aims to help them to avoid known pitfalls and benefit from experiences gathered from the W3C Working Groups themselves. It provides techniques, tools, and templates that should facilitate and accelerate their work."

The W3C Internationalization Working Group has published the proposed recommendation of Character Model for the World Wide Web 1.0: Fundamentals. "This Architectural Specification provides authors of specifications, software developers, and content developers with a common reference for interoperable text manipulation on the World Wide Web, building on the Universal Character Set, defined jointly by the Unicode Standard and ISO/IEC 10646. Topics addressed include use of the terms 'character', 'encoding' and 'string', a reference processing model, choice and identification of character encodings, character escaping, and string indexing."

This version spins out a new spec, Character Model for the World Wide Web 1.0: Resource Identifiers, which is in candidate recommendation. This spec basically says other specs should use IRIs everywhere, and should be careful to define when the conversion to URIs takes place.

IBM's developerWorks has published an article I wrote about RELAX NG with custom datatype libraries. This article explores one of the most powerful but little-known features of RELAX NG: the ability to define new simple data types using Java code. This enables one to check constraints like a number is prime, every left parenthesis is matched by a properly balanced right parenthesis, or the value of an SKU attribute matches the value of an SKU field in an external database. None of these constraints are expressible in the W3C XML Schema Language.
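The article's custom datatypes are written in Java against the RELAX NG datatype library interfaces. The Python functions below only sketch the lexical checks two of those examples would perform (a prime number and balanced parentheses), not the plumbing that registers a datatype with a validator. The function names are hypothetical.

```python
import re


def is_prime_literal(text: str) -> bool:
    """Accept only a plain decimal literal (surrounding whitespace trimmed) naming a prime."""
    text = text.strip()
    if not re.fullmatch(r"[0-9]+", text):  # rejects signs, underscores, non-ASCII digits
        return False
    n = int(text)
    if n < 2:
        return False
    i = 2
    while i * i <= n:  # trial division up to the square root
        if n % i == 0:
            return False
        i += 1
    return True


def has_balanced_parens(text: str) -> bool:
    """True if every "(" is closed by a later ")" and no ")" appears unmatched."""
    depth = 0
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0


print(is_prime_literal(" 13 "), has_balanced_parens("(a(b)c)"))  # True True
```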

Ispras Modis has posted Sedna 0.3, an open source native XML database for Windows written in C++ and Scheme and published under the Apache License 2.0. This is not currently recommended for production. Sedna has partial support for XQuery and its own declarative update language.

The W3C SVG and CSS Working Groups have posted the second public working draft of SVG's XML Binding Language (sXBL). In brief, think of this as stylesheets on steroids. The goal is to be able to render any XML document by transforming it into SVG. This would allow the rendering of things that don't look remotely like text, such as MathML and MusicXML.

Stefan Champailler has posted DTDDoc 0.0.11, a JavaDoc-like tool for creating HTML documentation of document type definitions from embedded DTD comments. This release adds a DTD tree browser, an entities index for each DTD, clickable element models, and autodetection of root elements. DTDDoc is published under an MIT license.

The W3C XML Protocol Working Group has published three proposed recommendations covering XOP, a MIME multipart envelope format for bundling XML documents with binary data:

- XML-binary Optimized Packaging "defines the XML-binary Optimized Packaging (XOP) convention, a means of more efficiently serializing XML Infosets (see [XMLInfoSet]) that have certain types of content. A XOP package is created by placing a serialization of the XML Infoset inside of an extensible packaging format (such a MIME Multipart/Related, see [RFC 2387]). Then, selected portions of its content that are base64-encoded binary data are extracted and re-encoded (i.e., the data is decoded from base64) and placed into the package. The locations of those selected portions are marked in the XML with a special element that links to the packaged data using URIs."

- SOAP Message Transmission Optimization Mechanism "describes an abstract feature and a concrete implementation of it for optimizing the transmission and/or wire format of SOAP messages. The concrete implementation relies on the [XOP] format for carrying SOAP messages."

- Resource Representation SOAP Header Block "describes the semantics and serialization of a SOAP header block for carrying resource representations in SOAP messages."

Basically this is another whack at the packaging problem: how to wrap up several documents, including both XML and non-XML documents, and transmit them in a single SOAP request or response. In brief, this proposes using a MIME envelope to do that. This is all reasonable. I do question the wisdom, however, of pretending this is just another XML document. It's not. The working group wants to ship binary data like images in their native binary form, which is sensible. What I don't like is that the working group wants to take their non-XML, MIME-based format and say that it's XML because you could theoretically translate the binary data into Base-64, reshuffle the parts, and come up with something that is an XML document, even though they don't expect anyone to actually do that.

Why is there this irresistible urge throughout the technology community to call everything XML, even when it clearly isn't and clearly shouldn't be? XML is very good for what it is, but it doesn't and shouldn't try to be all things to all people. Binary data is not something XML does well and not something it ever will do well. Render into binary what is binary, and render into XML what is text.
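To make the XOP mechanism concrete, here's a minimal Java sketch of the extraction step the first spec describes: base64 element content is decoded back to raw bytes, set aside as a separate part keyed by a cid: URI, and replaced in the XML by an xop:Include element pointing at it. It is not a conforming XOP implementation (there's no MIME serialization at all). The `photo` element, the cid: naming scheme, and the class and method names are hypothetical, and the XOP namespace URI is an assumption that should be checked against the spec.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class XopSketch {

    // Namespace for xop:Include; assumed, verify against the XOP spec.
    static final String XOP_NS = "http://www.w3.org/2004/08/xop/include";

    static Document parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            return factory.newDocumentBuilder().parse(
                new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse document", e);
        }
    }

    /**
     * Replaces the base64 content of each element with the given tag name by an
     * xop:Include reference. Returns the decoded bytes keyed by cid: URI; in real
     * XOP these would become parts of the MIME package.
     */
    static Map<String, byte[]> optimize(Document doc, String tagName) {
        NodeList live = doc.getElementsByTagName(tagName);
        // Copy the live NodeList first, since the tree is mutated below.
        Element[] targets = new Element[live.getLength()];
        for (int i = 0; i < targets.length; i++) {
            targets[i] = (Element) live.item(i);
        }
        Map<String, byte[]> parts = new LinkedHashMap<>();
        int counter = 0;
        for (Element target : targets) {
            // The MIME decoder tolerates line breaks inside the base64 text.
            byte[] raw = Base64.getMimeDecoder().decode(target.getTextContent().trim());
            String cid = "cid:part" + (++counter) + "@example.org"; // hypothetical naming scheme
            parts.put(cid, raw);
            // Drop the base64 text and point at the packaged binary part instead.
            while (target.hasChildNodes()) {
                target.removeChild(target.getFirstChild());
            }
            Element include = doc.createElementNS(XOP_NS, "xop:Include");
            include.setAttributeNS(null, "href", cid);
            target.appendChild(include);
        }
        return parts;
    }

    public static void main(String[] args) {
        Document doc = parse("<msg><photo>SGVsbG8=</photo></msg>");
        for (Map.Entry<String, byte[]> part : optimize(doc, "photo").entrySet()) {
            System.out.println(part.getKey() + " -> " + part.getValue().length + " bytes");
        }
    }
}
```

Note that the "XML" left behind is only meaningful together with the extracted parts, which is rather the point of the complaint: the package as a whole is a MIME object, not an XML document.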

The W3C Web Content Accessibility Guidelines Working Group has posted five public working drafts covering various topics:

- Web Content Accessibility Guidelines 2.0

- CSS Techniques for WCAG 2.0

- General Techniques for WCAG 2.0

- HTML Techniques for WCAG 2.0

- Client-side Scripting Techniques for WCAG 2.0

These describe "design principles for creating accessible Web content. When these principles are ignored, individuals with disabilities may not be able to access the content at all, or they may be able to do so only with great difficulty. When these principles are employed, they also make Web content accessible to a variety of Web-enabled devices, such as phones, handheld devices, kiosks, network appliances. By making content accessible to a variety of devices, that content will also be accessible to people in a variety of situations."

There's some useful information in here. I knew most of this stuff already, but I did find a few new ideas. Frames can have titles, which I didn't know, but then I rarely if ever use frames. More practical for me is that I can put an abbr attribute on th elements to provide terse substitutes for header labels to be used for screen readers. Also, "Use the address element to define a page's author." I'd forgotten about that one, but I'll be adding it to my pages now. And I should probably be using an abbr element with a title attribute rather than spelling out "Java Specification Request (JSR)" every time somebody submits a new draft to the JCP. See? I used it already!

Jacob Roden has posted csv2xml, a simple open source (BSD license) command line utility for converting comma separated values files to XML.
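The core of any such converter is simple, though the details matter. Here's a minimal Java sketch, not based on csv2xml's code: it assumes the naive form of CSV (no quoted fields, no embedded commas or newlines), treats the first line as a header row, and invents hypothetical `records`/`record`/`field` element names. The one thing it does take care over is escaping, so that a value like "Fish & Chips" doesn't produce malformed output. It does not screen out control characters that are illegal in XML.

```java
import java.util.Arrays;
import java.util.List;

public class CsvToXml {

    // Escapes markup-significant characters; safe for element content and
    // for double-quoted attribute values.
    static String escape(String s) {
        StringBuilder sb = new StringBuilder(s.length());
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '&': sb.append("&amp;"); break;
                case '<': sb.append("&lt;"); break;
                case '>': sb.append("&gt;"); break;
                case '"': sb.append("&quot;"); break;
                default: sb.append(c);
            }
        }
        return sb.toString();
    }

    /**
     * Converts naive CSV (first line is the header row) to XML.
     * Quoted fields, embedded commas, and embedded newlines are NOT handled.
     */
    static String convert(List<String> lines) {
        String[] header = lines.get(0).split(",", -1);
        StringBuilder out = new StringBuilder("<records>\n");
        for (String line : lines.subList(1, lines.size())) {
            String[] values = line.split(",", -1); // -1 keeps trailing empty fields
            out.append("  <record>\n");
            for (int i = 0; i < values.length; i++) {
                // Header text goes in an attribute, since "unit price" isn't a legal element name.
                String name = i < header.length ? header[i] : "column" + (i + 1);
                out.append("    <field name=\"").append(escape(name)).append("\">")
                   .append(escape(values[i])).append("</field>\n");
            }
            out.append("  </record>\n");
        }
        return out.append("</records>\n").toString();
    }

    public static void main(String[] args) {
        List<String> csv = Arrays.asList("item,unit price", "Fish & Chips,5");
        System.out.print(convert(csv));
    }
}
```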

The XML 1.1 Bible is now available as an eBook in Adobe Reader format. Diesel eBooks has it on sale for just $27.48. Amazon has lowered their price for the paper version to just $26.39, including free shipping. Bookpool is selling the paper version for $24.95, but you'll need to pay for shipping unless your total order exceeds $40.00.

Oleg Paraschenko has released TeXML 1.2, an XML vocabulary for TeX. The processor that transforms TeXML markup into TeX markup is written in Python, and thus should run on most modern platforms. The intended audience is developers who automatically generate TeX files. According to Paraschenko, "The main new feature is an automatic laying out of the generated LaTeX code. In fully automatic mode, the TeXML processor deletes redundant spaces and splits long lines on smaller chunks. The generated LaTeX code is legible enough for humans to read and modify." TeXML is published under the GPL.

The W3C XForms working group has posted the first public working draft of XForms 1.1. Changes since 1.0 include:

- A new namespace URI, http://www.w3.org/2004/xforms/

- power, luhn, current, and property XPath extension functions

- An e-mail address datatype

- An ID card number datatype

- A duplicate action element and a corresponding xforms-duplicate event

- A destroy action element and a corresponding xforms-destroy event

- An xforms-close event

- An xforms-submit-serialize event

- Inline rendition of non-text media types
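The new luhn() extension function checks a string of digits against the Luhn mod 10 checksum used on credit card numbers. Here's a minimal Java sketch of the underlying algorithm. How the draft treats non-digit input isn't covered here, so rejecting anything that isn't an ASCII digit is this sketch's own choice; the class and method names are hypothetical.

```java
public class Luhn {

    /** Returns true if the digit string passes the Luhn (mod 10) check. */
    static boolean isValid(String digits) {
        if (digits == null || digits.isEmpty()) return false;
        int sum = 0;
        boolean doubleIt = false; // the rightmost (check) digit is not doubled
        for (int i = digits.length() - 1; i >= 0; i--) {
            char c = digits.charAt(i);
            if (c < '0' || c > '9') return false; // assumption: no spaces or dashes allowed
            int d = c - '0';
            if (doubleIt) {
                d *= 2;
                if (d > 9) d -= 9; // same as adding the two digits of d
            }
            sum += d;
            doubleIt = !doubleIt;
        }
        return sum % 10 == 0;
    }

    public static void main(String[] args) {
        System.out.println(isValid("79927398713"));
        System.out.println(isValid("79927398710"));
    }
}
```

The first call returns true: the undoubled digits sum to 42 and the doubled ones to 28, for a total of 70. Changing the check digit to 0 drops the total to 67, so the second call returns false.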

Andy Clark has posted version 0.9.4 of his CyberNeko Tools HTML Parser for the Xerces Native Interface (NekoXNI) and version 0.2.2 of his ManekiNeko RelaxNG Validator. This new version of the HTML parser is mostly a bug fix release. The RELAX NG validator adds an option to set an entity resolver. CyberNeko is written in Java. Besides the HTML parser and RELAX NG validator, CyberNeko includes a generic XML pull parser, a DTD parser, and a DTD to XML converter.

Adobe has posted an update to their SVG viewer plug-in for Windows that fixes a couple of bugs including a security hole. Everyone using this on Windows should upgrade. Other platforms are not affected.

Apparently Amsterdam was a success last year. XML Europe is now XTech and has settled in Amsterdam again. This year it will take place May 24-27, convenient for most academic schedules. The call for papers has been posted. I'll have to think of something to submit. Submissions are due by January 7.

Happy Fifth Birthday XSLT!

Altova GmbH has released the Altova XSLT 1.0 and 2.0 Engines and the Altova XQuery Engine. These are closed source and free-beer products for Windows 2000 and later. These are the same engines used in XMLSpy. The XQuery and XSLT 2.0 engines are not fully standards conformant. I'm not sure about the XSLT 1.0 engine, but any bugs in XMLSpy's XSLT are probably found here too.

The W3C Voice Browser Working Group has posted a new working draft of Semantic Interpretation for Speech Recognition. According to the abstract,

This document defines the process of Semantic Interpretation for Speech Recognition and the syntax and semantics of semantic interpretation tags that can be added to speech recognition grammars to compute information to return to an application on the basis of rules and tokens that were matched by the speech recognizer. In particular, it defines the syntax and semantics of the contents of Tags in the Speech Recognition Grammar Specification.

Semantic Interpretation may be useful in combination with other specifications, such as the Stochastic Language Models (N-Gram) Specification, but their use with N-grams has not yet been studied.

The results of semantic interpretation describe the meaning of a natural language utterance. The current specification represents this information as an ECMAScript object, and defines a mechanism to serialize the result into XML. The W3C Multimodal Interaction Activity is defining a data format (EMMA) for representing information contained in user utterances. It is believed that semantic interpretation will be able to produce results that can be included in EMMA.

Opera Software has posted the third beta of version 7.6.0 of their namesake web browser for Windows. Opera supports HTML, XML, XHTML, RSS, WML 2.0, and CSS. XSLT is not supported. Other features include IRC, mail, and news clients and pop-up blocking. There are lots of little changes, bug fixes, and usability enhancements in 7.60. However, major new features include speech-enabled browsing (including support for XHTML+Voice), medium-screen rendering, and inline error pages. Opera is $39 payware.

The W3C Synchronized Multimedia working group has posted a proposed edited recommendation of Synchronized Multimedia Integration Language (SMIL 2.0). According to the draft, "there are no substantial implementation issues arising as a result of this edition, which aims only to incorporate the published corrigenda to the first edition." Comments are due by December 5.

The Gnome Project has released version 2.6.16 of libxml2, the open source XML C library for Gnome. This release fixes various bugs.

Sun's released version 1.5 of the Java Web Services Developer Pack. This release adds "XML Web Services Security, a preview of the Sun Java Streaming XML Parser based on JSR 173, as well as updates to existing web services technologies previously released in the Java WSDP." The complete contents are:

- XML and Web Services Security v1.0

- XML Digital Signatures v1.0 EA2

- Sun Java Streaming XML Parser v1.0 EA

- Java Architecture for XML Binding (JAXB) v1.0.4

- Java API for XML Processing (JAXP) v1.2.6_01

- Java API for XML Registries (JAXR) v1.0.7

- Java API for XML-based RPC (JAX-RPC) v1.1.2_01

- SOAP with Attachments API for Java (SAAJ) v1.2.1_01

- JavaServer Pages Standard Tag Library (JSTL) v1.1.1_01

- Java WSDP Registry Server v1.0_08

- Ant Build Tool 1.6.2

- WS-I Attachments Sample Application 1.0 EA3

This should all run in Java 1.4 and later.

Michael Smith has posted version 1.67.0 of the DocBook XSL stylesheets. These support transforms to HTML, XHTML, and XSL-FO. Besides bug fixes, major enhancements in this release include:

- Enabled dbfo table-width on entrytbl in FO output

- Added support for role=strong on emphasis in FO output

- Added new FO parameter hyphenate.verbatim that can be used to turn on "intelligent" wrapping of verbatim environments.

- Replaced all <tt></tt> output with <code></code>

- Use strong/em instead of b/i in HTML output

- Added Saxon8 extensions

Peter Eisentraut has released version 1.79 of the DocBook DSSSL stylesheets. According to Eisentraut, "This is a maintenance release. It fixes a number of outstanding bugs and contains updated translations." New features include:

- The doctype declaration in the HTML output now contains a system identifier

- CSS decoration has been added to procedure steps.

- Uses of <VAR> in HTML output (often rendered in italic) have been changed to something more appropriate.

- Admonition titles and contents are kept together.

- Programlistings with callouts now honor the width attribute.

- "pc" is now allowed as abbreviation for "pica".

- Bosnian and Bulgarian translations have been added.

Cladonia Ltd. has released the Exchanger XML Editor 3.0, a $98 payware Java-based XML editor. Features include:

- Schema Based Editing

- Tag Prompting

- Validation against DTD, XML Schema, RelaxNG

- Tree View and Outliner for Tag Free editing

- XPath and Regular expression searches

- Schema Conversion

- XSLT

- Project Management

- SVG Viewer and Conversion

- Easy SOAP Invocations

- Find in Files

- Extension Handling

- DTD editing

- XML catalogs

- RelaxNG and DTD based tag completion.

- XSLT Debugger

- XML Signature support

- Better performance with large documents

- WSDL Analyzer

- WebDAV and FTP support

- XInclude resolution

New features in version 3.0 include:

- Unordered XML Differencing and Merging

- Content Folding

- Split Views

- User defined Keyboard Shortcuts

- Emacs Keyboard Shortcuts

- Multiple Tag-Completion Schemas

- Attribute Value Prompting

- Navigator with XPath Filters

The W3C XML Core Working Group has posted the second and last call working draft of xml:id Version 1.0. This describes an idea that's been kicked around in the community for some time. The basic problem is how to link to elements by IDs when a document doesn't have a DTD or schema. The proposed solution is to predefine an xml:id attribute that would always be recognized as an ID, regardless of the presence or absence of a DTD or schema.
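The practical payoff is that a program can find an element by ID with no DTD in sight. Here's a minimal Java sketch using only standard DOM calls: parse with namespace awareness turned on (the xml prefix is always bound to http://www.w3.org/XML/1998/namespace), then walk the tree looking for a matching xml:id attribute. It doesn't assume that the parser itself marks xml:id attributes as IDs, so it avoids getElementById(); the class and method names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;

public class XmlIdLookup {

    // The namespace the xml: prefix is permanently bound to.
    static final String XML_NS = "http://www.w3.org/XML/1998/namespace";

    static Document parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // needed for getAttributeNS to see xml:id
            return factory.newDocumentBuilder().parse(
                new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse document", e);
        }
    }

    /** Depth-first search for the element whose xml:id equals id; null if none. */
    static Element findByXmlId(Node node, String id) {
        if (node.getNodeType() == Node.ELEMENT_NODE) {
            Element element = (Element) node;
            if (element.hasAttributeNS(XML_NS, "id")
                    && element.getAttributeNS(XML_NS, "id").equals(id)) {
                return element;
            }
        }
        for (Node child = node.getFirstChild(); child != null; child = child.getNextSibling()) {
            Element found = findByXmlId(child, id);
            if (found != null) return found;
        }
        return null;
    }

    public static void main(String[] args) {
        // No DOCTYPE and no schema, yet the ID is still recognizable.
        Document doc = parse("<book><chapter xml:id=\"intro\">Hello</chapter></book>");
        System.out.println(findByXmlId(doc, "intro").getTextContent());
    }
}
```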

The W3C XML Binary Characterization Working Group has published the third working draft of XML Binary Characterization Use Cases. Apparently the one they posted five days earlier had "some obsolete content. This new publication is meant to reflect the up-to-date state of the document, it is recommended not to read the previous version."

The Mozilla Project has released Firefox 1.0, the open source web browser that is rapidly gaining on Internet Explorer. Firefox supports HTML, XHTML, CSS, and XSLT. MathML and SVG aren't supported out of the box, but can be added.

In my continuing efforts to make XML dead-bang easy to manipulate with Java, I've posted beta 7 of XOM, my dual streaming/tree API for processing XML with Java. This release fixes a few bugs and approximately doubles the performance of a few common operations including getValue(), toXML(), DOM and SAX conversion, canonicalization, and XSL transformation.

This is the first release candidate. There are still a few open issues with regard to error handling in XInclude that require clarification from the XInclude working group. If they decide that how XOM currently behaves is correct, then XOM 1.0 is essentially complete. If they decide to require different behavior, a few changes may yet need to be made.

Skipping right over candidate recommendation (I guess they think this has already been implemented), the W3C Technical Architecture Group (TAG) has posted the proposed recommendation of Architecture of the World Wide Web, First Edition. Quoting from the abstract:

The World Wide Web uses relatively simple technologies with sufficient scalability, efficiency and utility that they have resulted in a remarkable information space of interrelated resources, growing across languages, cultures, and media. In an effort to preserve these properties of the information space as the technologies evolve, this architecture document discusses the core design components of the Web. They are identification of resources, representation of resource state, and the protocols that support the interaction between agents and resources in the space. We relate core design components, constraints, and good practices to the principles and properties they support.

It's pretty good stuff overall. Everyone working on the Web, the Semantic Web, or with XML or URIs should read it. Even a cursory skim reveals a few surprises. For instance, apparently URIs with fragment identifiers are now considered to be full-fledged URIs, not just URI references (When did that change?) and there's no actual syntax for fragment identifiers for URIs that point to XML documents. (What happened to XPointer?) Comments are due by December 3.

The W3C XSL Working Group has published the last call working draft of XSL Transformations (XSLT) Version 2.0. According to the draft, more significant changes since the previous XSLT 2 draft include:

- A new attribute, use-when, allows compile-time conditional inclusion of sections of the stylesheet depending on the processing environment (for example, for schema-aware or non-schema-aware processing).

- A switch, input-type-annotations, defines whether the stylesheet expects source data to have been validated and annotated by a schema processor.

- A new instruction, xsl:document, is provided to construct a document node.

- Serialization attributes can now be specified (dynamically) on the xsl:result-document instruction.

- A schema can now be included inline within the xsl:import-schema declaration.

The W3C XML Binary Characterization Working Group has published the second working draft of XML Binary Characterization Use Cases. This divides roughly 50-50 into things that should be done in plain vanilla XML (Web Services for Small Devices, Web Services as an Alternative to CORBA, Electronic Documents, FIXML) and things that should not be done in anything remotely like XML (Floating Point Arrays in the Energy Industry, PC-free Photo Printing). In brief, they're trying to turn a station wagon into a Ferrari, and instead they're going to end up with an Edsel. Despite the hype XML is not, cannot, and will not be all things to all people. At best this effort will fail. At worst, it will fail and take down XML with it.

A few of the use cases (Embedding External Data in XML Documents, PC-free Photo Album Generation) demonstrate legitimate needs to bundle binary data with XML. However, they're doing it inside out. The XML and the binary data should be combined in a non-XML envelope like XOP proposes, rather than forcing the binary's square pegs into XML's round holes.

Wolfgang Meier of the Darmstadt University of Technology has posted the second beta of eXist 1.0, an open source native XML database that supports fulltext search. XML can be stored in either the internal, native XML database or an external relational database. The search engine supports XPath and XQuery. The server is accessible through HTTP and XML-RPC interfaces and supports the XML:DB API for Java programming.

According to Meier, "This release benefits from a lot of testing done by other projects, and fixes many instabilities and database corruptions that were still present in the previous version. In particular, the XUpdate implementation should now have reached a stable state. Concurrent XUpdates are fully supported. The XQuery implementation has matured, adding support for collations, computed constructors, and more. Module loading has been improved, allowing more complex web interfaces to be written entirely in XQuery (see new admin interface). Finally, there's a new WebDAV module, a reindex/repair option and support for running eXist as a system service." eXist is published under the LGPL.

MetaStuff Ltd. has released dom4j 1.5.1, a tree-based API for processing XML with Java. dom4j is based on interfaces rather than classes, which distinguishes it from alternatives like JDOM and XOM. (Not to its credit, in my opinion. Using concrete classes instead of interfaces was one of the crucial decisions that made JDOM as simple as it is.) Version 1.5/1.5.1 seems to be mostly a collection of bug fixes and small, backwards compatible API enhancements. It improves compliance with the DOM interfaces and adds support for StAX.

dom4j is published under a BSD license. However, it uses code from the GNU Classpath extension Project (specifically the Ælfred parser) in a manner incompatible with its license, and it really should be published under the GPL as a result. Because dom4j's own BSD license is incompatible with the GPL, any distribution of dom4j must violate either the copyright of MetaStuff or the copyright of the Free Software Foundation. You might be able to cure this for your own distribution by removing the org.dom4j.aelfred and org.dom4j.aelfred2 packages from your own code base, and linking to unmodified copies of GNU JAXP instead. However, there might be other license mines in other parts of the code base. dom4j has a long history of ignoring other projects' licenses (it started life as an illegal fork of JDOM, though that has since been cured), and it wouldn't surprise me in the least to find more misappropriated code in other packages.

The W3C XML Protocol Working Group has published the last call working draft of Assigning Media Types to Binary Data in XML. This spec attempts to preserve the original MIME media type of Base-64 encoded binary data stuffed in an XML element.

The mechanism by which this happens is an xmlmime:contentType attribute for indicating the media type of XML element content whose type is xs:base64Binary. It also defines an expectedMediaType for use in schema annotations to indicate what the contentType attribute may say.
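On the consuming side, the idea is that a program reads the declared media type off the element before decoding the payload. Here's a minimal Java sketch. The draft's namespace URI for the xmlmime prefix isn't quoted here, so the sketch takes it as a parameter and the sample uses an obviously fake placeholder; the `Payload` holder and the class and method names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

public class MediaTypedBinary {

    /** Decoded bytes plus the media type declared on the element (null if absent). */
    static final class Payload {
        final String mediaType;
        final byte[] data;

        Payload(String mediaType, byte[] data) {
            this.mediaType = mediaType;
            this.data = data;
        }
    }

    static Document parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // contentType is a namespaced attribute
            return factory.newDocumentBuilder().parse(
                new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse document", e);
        }
    }

    /** Reads contentType in the given namespace and base64-decodes the element's text. */
    static Payload read(Element element, String xmlmimeNamespace) {
        String type = element.hasAttributeNS(xmlmimeNamespace, "contentType")
            ? element.getAttributeNS(xmlmimeNamespace, "contentType")
            : null; // no declared type: the caller has to guess
        byte[] data = Base64.getMimeDecoder().decode(element.getTextContent().trim());
        return new Payload(type, data);
    }

    public static void main(String[] args) {
        String ns = "urn:example:xmlmime"; // placeholder, NOT the spec's namespace URI
        Document doc = parse("<img xmlns:xmlmime=\"" + ns + "\" "
            + "xmlmime:contentType=\"image/png\">iVBORw0KGgo=</img>");
        Payload p = read(doc.getDocumentElement(), ns);
        System.out.println(p.mediaType + ", " + p.data.length + " bytes");
    }
}
```

The sample payload is just the eight-byte PNG signature, enough to show that the type label and the decoded bytes travel together.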

The W3C Timed Text (TT) Working Group has posted the first public working draft of Timed Text (TT) Authoring Format 1.0 – Distribution Format Exchange Profile (DFXP). According to the abstract,

This document specifies the distribution format exchange profile (DFXP) of the timed text authoring format (TT AF) in terms of a vocabulary and semantics thereof.

The timed text authoring format is a content type that represents timed text media for the purpose of interchange among authoring systems. Timed text is textual information that is intrinsically or extrinsically associated with timing information.

The distribution format exchange profile is intended to be used for the purpose of transcoding or exchanging timed text information among legacy distribution content formats presently in use for subtitling and captioning functions.

The W3C Voice Browser working group has posted Pronunciation Lexicon Specification (PLS) Version 1.0 Requirements. According to the abstract, "This document is part of a set of requirements studies for voice browsers, and provides details of the requirements for markup used for specifying application specific pronunciation lexicons. Application specific pronunciation lexicons are required in many situations where the default lexicon supplied with a speech recognition or speech synthesis processor does not cover the vocabulary of the application. A pronunciation lexicon is a collection of words or phrases together with their pronunciations specified using an appropriate pronunciation alphabet."

The W3C XQuery and XSLT Working Groups have updated five working drafts:

- XQuery 1.0 and XPath 2.0 Data Model

- XQuery 1.0 and XPath 2.0 Functions and Operators

- XML Path Language (XPath) 2.0

- XQuery 1.0: An XML Query Language

- XSLT 2.0 and XQuery 1.0 Serialization

The XSLT 2 working draft hasn't been updated for the second time in a row now. I'm not sure what's holding it up.

Most of the changes in XPath 2.0 in this draft seem to be editorial, more aimed at tightening up the spec than at changing the language itself. Some of the more substantive changes include:

- SequenceType syntax has been simplified. SchemaContextPath is no longer part of the SequenceType syntax.

- xdt:untypedAny has changed to xdt:untyped.

- xs:anyType is no longer abstract, and is used to denote the type of a partially validated element node.

- Value comparisons return () if either operand is ().

- The precedence of the cast, treat, and unary arithmetic operators has been increased.

- A new component has been added to the static context: context item static type.

Most of the changes in XQuery are a little more substantive and include:

- The last step in a path expression can return a sequence of atomic values or a sequence of nodes (mixed nodes and atomic values are not allowed).

- A value of type xs:QName is now defined to consist of a "triple": a namespace prefix, a namespace URI, and a local name. Including the prefix as part of the QName value makes it possible to cast any QName into a string when needed.

- Local namespace declarations have been deleted from computed element constructors. No namespace bindings may be declared by a computed element constructor.

- The Prolog has been reorganized into three parts which must appear in this order: (a) Setters; (b) Namespace declarations and module and schema imports; (c) function and variable declarations.

- A new "inherit-namespaces" declaration has been added to the Prolog, and "namespace inheritance mode" has been added to the static context.

- An "encoding" subclause has been added to the Version Declaration in the Prolog.

- A new declaration has been added to the Prolog to control the query-wide default handling of empty sequences in ordering keys ("empty greatest" or "empty least").

- In the static context, "current date" and "current time" have been replaced by "current dateTime", which is defined to include a timezone.

- Computed comment constructors now raise an error rather than trying to "fix up" a malformed comment by inserting blanks.

- The div operator can now divide two yearMonthDurations or two dayTimeDurations. In either case, the result is of type xs:decimal.

- Support for XML 1.1 and Namespaces 1.1 has been bundled together and defined as an optional feature. Various aspects of query processing and serialization that depend on this optional feature have been identified.

- Cyclic module imports are no longer permitted. A module M may not import another module that directly or indirectly imports module M.

- The application/xquery MIME media type has been defined.
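The duration division rule is easy to model under one assumption: that a yearMonthDuration boils down to a count of months and a dayTimeDuration to a count of seconds, as they do in the Functions and Operators drafts as far as I can tell. Dividing two durations of the same kind is then plain decimal division. Here's a Java sketch under that assumption; the method names are hypothetical, and the 16-digit precision cap is this sketch's choice, not something the draft specifies.

```java
import java.math.BigDecimal;
import java.math.MathContext;

public class DurationDivision {

    /** yearMonthDuration div yearMonthDuration, with each duration reduced to total months. */
    static BigDecimal divideYearMonth(int years1, int months1, int years2, int months2) {
        BigDecimal a = BigDecimal.valueOf(years1 * 12L + months1);
        BigDecimal b = BigDecimal.valueOf(years2 * 12L + months2);
        // A precision cap avoids ArithmeticException on non-terminating quotients like 1/3.
        return a.divide(b, MathContext.DECIMAL64);
    }

    /** dayTimeDuration div dayTimeDuration, with each duration reduced to seconds. */
    static BigDecimal divideDayTime(BigDecimal seconds1, BigDecimal seconds2) {
        return seconds1.divide(seconds2, MathContext.DECIMAL64);
    }

    public static void main(String[] args) {
        // P1Y6M div P6M: 18 months / 6 months
        System.out.println(divideYearMonth(1, 6, 0, 6));
        // PT1H30M div PT45M: 5400 seconds / 2700 seconds
        System.out.println(divideDayTime(new BigDecimal("5400"), new BigDecimal("2700")));
    }
}
```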

The Gnome Project has released version 2.6.15 of libxml2, the open source XML C library for Gnome. This release fixes various bugs including a couple of security issues. It also improves the XInclude error reports, adds some convenience functions to the Reader API, and supports processing instructions in HTML. They've also released version 1.1.12 of libxslt, the GNOME XSLT library for C and C++. This is a bug fix release.

The W3C XML Schema working group has released the second edition of the W3C XML Schema specifications. This is not a new language. The new specs just incorporate various errata found in the original specs since their publication a few years ago. The spec is still divided into three parts: Part 0: Primer, Part 1: Structures, and Part 2: Datatypes.

Opera Software has posted the second beta of version 7.6.0 of their namesake web browser for Windows. Opera supports HTML, XML, XHTML, RSS, WML 2.0, and CSS. XSLT is not supported. Other features include IRC, mail, and news clients and pop-up blocking. There are lots of little changes, bug fixes, and usability enhancements in 7.60. However, major new features include speech-enabled browsing (including support for XHTML+Voice), medium-screen rendering, and inline error pages. Opera is $39 payware.

The W3C Scalable Vector Graphics Working Group has posted the last call working draft of Scalable Vector Graphics (SVG) 1.2. Non-editorial changes in this draft include:

- requiredFormats test attribute

- requiredFonts test attribute

- "Various updates to SVGGlobal (formerly SVGWindow), including removal of documentStyleSheet and evt attributes, addition of screen and location attributes, addition of navigation method, addition of mouse capture and merging of existing new methods into the interface."

- Return type of SVGImage::getPixel is now SVGColor.

- Synchronization attributes imported from SMIL 2

- Added background-fill-opacity property.

- Changed compositing attribute to clipout

- New Selection interfaces

- "Auto" textLength

- animation element for displaying animated vector content

- Added "overflow" and "underflow" events for flow regions.

- Ogg Vorbis is required. No video formats are required.

- Event notification for shape changes, and event notification for rendering bounding box modifications.

- Mouse wheel events

- Two new methods on SVGLocatable for obtaining rendered bounds.

Kiyut has released Sketsa 2.2, a $29 payware SVG editor written in Java. Java 1.4.1 or later is required.

Martin Duerst has submitted a draft of The Archived-At Message Header Field. Briefly, this proposes adding to each e-mail message posted to a mailing list a header containing a semi-permanent URL for that message. This is an insanely good idea that I wish everyone would start using immediately. The W3C mailing lists already use X-Archived-At for this purpose. I just wish more lists would follow suit.
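Consuming such a header is trivial, which is part of why it's such a good idea. Here's a minimal Java sketch that pulls the URL out of a raw header block. It assumes the value is a URL optionally wrapped in angle brackets, accepts either the proposed name or the X- form, and ignores folded (continued) header lines; the class and method names are hypothetical.

```java
import java.util.Optional;

public class ArchivedAt {

    /** Returns the archive URL from an Archived-At or X-Archived-At header, if present. */
    static Optional<String> find(String message) {
        for (String line : message.split("\r?\n")) {
            if (line.isEmpty()) break; // a blank line ends the header section
            int colon = line.indexOf(':');
            if (colon < 0) continue;
            String name = line.substring(0, colon).trim();
            // Header field names are case-insensitive.
            if (name.equalsIgnoreCase("Archived-At") || name.equalsIgnoreCase("X-Archived-At")) {
                String value = line.substring(colon + 1).trim();
                if (value.startsWith("<") && value.endsWith(">")) {
                    value = value.substring(1, value.length() - 1);
                }
                return Optional.of(value);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        String message = "From: someone@example.org\r\n"
            + "Archived-At: <http://lists.example.org/archive/1234.html>\r\n"
            + "\r\n"
            + "Body text";
        System.out.println(find(message).orElse("(no archive URL)"));
    }
}
```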

The Apache Software Foundation has published The Common Gateway Interface (CGI) Version 1.1, an informational RFC that describes "'current practice' parameters of the 'CGI/1.1' interface developed and documented at the U.S. National Centre for Supercomputing Applications. This document also defines the use of the CGI/1.1 interface on UNIX(R) and other, similar systems."

Jiri Pachman has written fo2wordml, a stylesheet to convert XSL-FO to Microsoft's WordprocessingML format. (This is the second such tool I've seen in the last couple of weeks. I'm amazed this is even possible.)

Sébastien Cramatte has posted xslt2Xforms 0.7, an XSLT stylesheet that adds W3C XForms support to a web browser using XHTML, Javascript and CSS. This release only works in Mozilla.

Norm Walsh has written a draft of XML Chunk Equality, an attempt to decide when two XML infosets are and are not equal.
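For comparison, DOM Level 3 already defines one notion of node equality, Node.isEqualNode(). It ignores attribute order but compares child nodes in order, so a whitespace-only text node is enough to make two chunks unequal, and it compares namespace prefixes as well as URIs. How close Walsh's definition is to this isn't covered here; this Java sketch just shows the DOM baseline, and the class and method names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;

public class ChunkEquality {

    static Document parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            return factory.newDocumentBuilder().parse(
                new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse document", e);
        }
    }

    /** DOM Level 3 equality of the two documents' root elements. */
    static boolean sameRoot(String xml1, String xml2) {
        return parse(xml1).getDocumentElement().isEqualNode(parse(xml2).getDocumentElement());
    }

    public static void main(String[] args) {
        // Attribute order doesn't matter...
        System.out.println(sameRoot("<a x='1' y='2'><b/></a>", "<a y='2' x='1'><b/></a>"));
        // ...but a whitespace-only text node does.
        System.out.println(sameRoot("<a><b/></a>", "<a> <b/></a>"));
    }
}
```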

William F. Hammond has posted gellmu 0.8.0.5, "a LaTeX-like way to produce article-level for online display in the modern, fully accessible, form of HTML extended by the World Wide Web Consortium's Mathematical Markup Language (MathML)."

Dave Beckett has released the Raptor RDF Parser Toolkit 1.4.0, an open source C library for parsing the RDF/XML, N-Triples, Turtle, and Atom Resource Description Framework formats. It uses expat or libxml2 as the underlying XML parser. Version 1.4.0 can serialize RDF triples into RDF/XML and N-Triples and adds RSS enclosure support to the RSS tag soup parser. Raptor is dual licensed under the LGPL and Apache 2.0 licenses.

XMLmind has released version 2.8 of their XML Editor. This $220 payware product features word processor and spreadsheet like views of XML documents. A free-beer hobbled version is also available.

The xframe project has posted beta 5 of xsddoc, an open source documentation generator for W3C XML Schemas based on XSLT. xsddoc generates JavaDoc-like documentation of schemas. Java 1.3 or later is required.

Recordare has released Dolet 2.0, an $89.95 payware Mac OS X Finale plug-in for reading and writing MusicXML files. Java 1.4, Finale 2004 or 2005, and Mac OS X are required.

Adam Souzis has released Rx4RDF 0.4.1, a set of technologies designed to make the Resource Description Framework (RDF) easier to use. It includes:

- RxPath for querying, transforming and updating RDF by specifying a deterministic mapping of the RDF model to the XPath data model

- ZML, a Wiki-like text formatting language that lets you write arbitrary XML or HTML

- RxML, yet another alternative XML serialization for RDF, this one designed for easy authoring in ZML

- Raccoon, a simple application server that uses an RDF model for its data store

- Rhizome, a content management and delivery system that runs on Raccoon

- RDFScribbler, a web application that can display and edit any arbitrary RDF model using RxSLT and RxUpdate.

XMLMind has released the XMLmind FO Converter 2.0, an XSL-Formatting Objects to RTF converter written in Java. Version 2.0 adds the ability to convert XSL-FO documents to Microsoft's WordprocessingML format. The personal edition is free-beer. The professional edition adds an API for interacting with the product and costs $550.

Norm Walsh has posted the second candidate release of DocBook 4.4, an XML application designed for technical documentation and books such as Processing XML with Java. New elements in DocBook 4.4 include package and biblioref. This CR release fixes bugs and adds "wordsize as a global effectivity attribute" (whatever that means). He's also posted the second candidate release of simplified DocBook 1.1.

x-port.net has released formsPlayer 1.1, a free-beer (e-mail address required) XForms processor that "only works in Microsoft's Internet Explorer version 6 SP 1." Version 1.1 can dynamically set any aspect of submission "from instance data, including URLs and HTTP headers, making it possible to implement clients that use protocols like SOAP, Atom over SOAP or REST, WebDAV, and so on." It also fixes assorted bugs.

Karl Waclawek has released SAX for .NET 1.0, an open source port of the Java SAX API to C# and .NET 1.1. An implementation of this API based on expat is available, but is not compatible with Mono 1.0.2.

YesLogic has released Prince 4.0, a $295 payware batch formatter for Linux and Windows that produces PDF and PostScript from XML documents with CSS stylesheets. New features in 4.0 include XHTML style and link elements, PDF compression and font embedding, automatic table layout, shrink-to-fit floats, block alignment and negative margins, and word-breaking at soft hyphens.

I've posted the notes from last night's Effective XML presentation to the XML Developer's network of the Capital District, where a good time was had by all. Compared to my usual notes, these are a little sparse. Most of the material is covered in much greater depth in the book. I'm next scheduled to give this talk at Software Development 2005 West in March, but if there are any user groups or conferences that would like to hear it before then, send me e-mail, and we'll see what we can do.

Yesterday evening I was doing some programming with DOM, when I was reminded of the importance of failing fast (as well as just how much I hate DOM). I was running the XInclude Test Suite across my DOMXIncluder and logging the results to a simple, record-like XML document. The format for the output was suggested by the XInclude working group, but it's quite simple: no namespaces; nothing fancy. It looks like this:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<testresults processor="com.elharo.xml.xinclude.DOMXIncluder">
  <testresult id="imaq-include-xml-01" result="pass"/>
  <testresult id="imaq-include-xml-02" result="pass"/>
  <testresult id="imaq-include-xml-03" result="skipped">
    <note>DOMXIncluder does not support the xpointer scheme</note>
  </testresult>
  <testresult id="imaq-include-xml-04" result="pass"/>
  <testresult id="imaq-include-xml-05" result="pass"/>
  <testresult id="imaq-include-xml-06" result="fail"/>
  ...
```

One of the things I logged into the document was the exception messages encountered while running any of the 150 or so tests. Somewhere along the line, one or more of the exception messages I logged was null or contained a character that is illegal in XML, such as a form feed. However, I'm still not sure which ones, because DOM doesn't complain if you create a text node with malformed data that cannot possibly be serialized. Quite a while later, when I was serializing the document, the serializer complained and died with an unhelpful error message that didn't tell me where to find the problem. (I tried two serializers. Apache's XMLSerializer complained about a bad character, but didn't tell me what the character was or where it appeared. JAXP's identity transform simply generated a blank document without any error message.) As a kludgy fix, for the time being I've stopped logging the exception messages into DOM.

The problem is not that the exception messages contained illegal characters. If I had been informed of this, it would have been trivial to work around it. The problem was that DOM didn't bother checking for this, and blindly created a malformed document. XOM would have caught the error immediately when it happened, rather than waiting for the entire document to be serialized. It would have pinpointed exactly where the problem was so I could fix it. Draconian error handling is a feature, not a bug. It is the API's responsibility to detect bad input. It must not rely on client programmers to provide correct data. Even when the programmers are experts who really do know all the ins and outs of which input is legal, they may not be creating the input by hand. They are often passing in data from another source that has no idea it is talking to an API with particular preconditions. Precondition verification is a sine qua non for robust, published APIs; and it is a sine qua non that DOM fails to implement.
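The precondition check that DOM skips can be approximated on the client side. Below is a minimal Java sketch, not XOM's actual implementation: a hypothetical helper, `createCheckedText`, tests every code point against the XML 1.0 `Char` production and throws immediately, naming the offending character and its index, before handing the string to the standard `Document.createTextNode`. The class and method names are made up for illustration.

```java
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Text;

public class CheckedText {

    // True if code point c matches the XML 1.0 Char production:
    // #x9 | #xA | #xD | [#x20-#xD7FF] | [#xE000-#xFFFD] | [#x10000-#x10FFFF]
    static boolean isXmlChar(int c) {
        return c == 0x9 || c == 0xA || c == 0xD
            || (c >= 0x20 && c <= 0xD7FF)
            || (c >= 0xE000 && c <= 0xFFFD)
            || (c >= 0x10000 && c <= 0x10FFFF);
    }

    // Fails fast: rejects null or unserializable data when the node is created,
    // instead of letting the serializer choke on it much later.
    static Text createCheckedText(Document doc, String data) {
        if (data == null) {
            throw new IllegalArgumentException("Text data must not be null");
        }
        for (int i = 0; i < data.length(); ) {
            int c = data.codePointAt(i); // an unpaired surrogate comes back as itself and is rejected
            if (!isXmlChar(c)) {
                throw new IllegalArgumentException(
                    String.format("Illegal XML character U+%04X at index %d", c, i));
            }
            i += Character.charCount(c);
        }
        return doc.createTextNode(data);
    }

    public static void main(String[] args) throws ParserConfigurationException {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().newDocument();
        Element note = doc.createElement("note");
        doc.appendChild(note);
        note.appendChild(createCheckedText(doc, "DOMXIncluder does not support the xpointer scheme"));
        try {
            note.appendChild(createCheckedText(doc, "page one\fpage two"));
        } catch (IllegalArgumentException ex) {
            // prints: Illegal XML character U+000C at index 8
            System.out.println(ex.getMessage());
        }
    }
}
```

The point of the design is where the exception surfaces: at the call that introduced the bad data, with enough detail to fix it, rather than at serialization time.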

Tomorrow evening (Tuesday) I'll be talking about Effective XML at the XML Developers Network of the Capital District in Albany, New York. The meeting runs from 6:00 to 8:00 P.M. Everyone's invited.

Planamesa Software has posted the second alpha of NeoOffice/J 1.1, a Mac OS X variant of OpenOffice that replaces X-Windows with Java Swing. This release "supports the features in OpenOffice.org 1.1.2 including faster startup, right-to-left and vertical text editing, and the ability to save documents directly to PDF." I wrote the original version of the Effective XML presentation in OpenOffice on Linux, which proved not up to the task, so I eventually moved it to PowerPoint. I'll check this out and see if maybe I can use NeoOffice/J for tomorrow night's presentation, but no promises.

OK: verdict's in. That was quick. This product is definitely not ready for prime time, at least in the presentation component. As soon as I opened my PowerPoint slides, NeoOffice/J seemed to get stuck in an infinite flashing loop of draw and redraw. At least it let me quit, but I couldn't do anything else. I will be using PowerPoint tomorrow night.

The GEO (Guidelines, Education & Outreach) Task Force of the W3C Internationalization Working Group (I18N WG) has published the first public working draft of Authoring Techniques for XHTML & HTML Internationalization: Specifying the language of content 1.0. The table of contents provides a nice summary of the rules:

- Always declare the default text processing language of the page, using the html tag, unless there are more than one primary languages.

- Consider using a Content-Language declaration in the HTTP header or a meta tag to declare metadata about the primary language of a document.

- Do not use Content-Language to declare the default text processing language, and do not use language attributes to declare the primary language metadata.

- Do not declare the language of a document in the body tag.

- If you are using Content-Language to indicate the primary language metadata when there are multiple primary languages, provide a comma-separated list of all primary language tags.

- For documents with multiple primary languages, decide whether you want to declare a single text processing language in the html tag, or leave it undefined.

- For documents with multiple primary languages, try to divide the document at the highest possible level, and declare the appropriate text processing language in those blocks.

- Use the lang and/or xml:lang attributes around text to indicate any changes in language.

- For HTML use the lang attribute only, for XHTML 1.0 served as text/html use the lang and xml:lang attributes, and for XHTML served as XML use the xml:lang attribute only.

- Follow the guidelines in RFC3066 for language attribute values.

- Use the two-letter ISO 639 codes for the language code where there are both 2- and 3-letter codes.

- Consider using the codes zh-Hans and zh-Hant to refer to Simplified and Traditional Chinese, respectively.

- When pointing to a resource in another language, consider the pros and cons of using CSS to indicate the language, based on the value of the hreflang attribute of the a element.

- If using CSS to generate a language marker from the hreflang attribute, do not use flag icons to indicate languages.
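A couple of these rules are mechanical enough to sketch in code. The following Java sketch checks a language tag against the RFC 3066 syntax (a primary subtag of one to eight letters, followed by optional hyphen-separated subtags of one to eight letters or digits) and picks the attributes the draft recommends for HTML, for XHTML 1.0 served as text/html, and for XHTML served as XML. It checks syntax only, not whether a subtag is a registered ISO 639 or country code, and the class, enum, and method names are hypothetical.

```java
import java.util.regex.Pattern;

public class LangTags {

    // RFC 3066: Language-Tag = Primary-subtag *( "-" Subtag )
    //           Primary-subtag = 1*8ALPHA ; Subtag = 1*8(ALPHA / DIGIT)
    private static final Pattern RFC3066 =
        Pattern.compile("[A-Za-z]{1,8}(-[A-Za-z0-9]{1,8})*");

    static boolean isWellFormed(String tag) {
        return tag != null && RFC3066.matcher(tag).matches();
    }

    // How the document is delivered, which decides the attributes to write.
    enum Serving { HTML, XHTML_AS_TEXT_HTML, XHTML_AS_XML }

    // lang only for HTML, lang plus xml:lang for XHTML 1.0 served as text/html,
    // xml:lang only for XHTML served as XML.
    static String languageAttributes(String tag, Serving serving) {
        if (!isWellFormed(tag)) {
            throw new IllegalArgumentException("Not an RFC 3066 language tag: " + tag);
        }
        switch (serving) {
            case HTML:
                return "lang=\"" + tag + "\"";
            case XHTML_AS_TEXT_HTML:
                return "lang=\"" + tag + "\" xml:lang=\"" + tag + "\"";
            default:
                return "xml:lang=\"" + tag + "\"";
        }
    }

    public static void main(String[] args) {
        System.out.println("<html " + languageAttributes("en", Serving.XHTML_AS_TEXT_HTML) + ">");
        System.out.println("<span " + languageAttributes("zh-Hans", Serving.XHTML_AS_XML) + ">");
        System.out.println(isWellFormed("en_US")); // underscore is not allowed, so false
    }
}
```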

Antenna House, Inc. has released XSL Formatter 3.2 for Linux and Windows. This tool converts XSL-FO files to PDF. Newly supported XSL-FO properties in 3.2 include alignment-adjust, alignment-baseline, dominant-baseline, glyph-orientation-horizontal, and glyph-orientation-vertical. New features in 3.2 include MathML support, WordML transformation, XSL Template designer integration, and end-user-defined and private use characters.

The lite version costs $300 and up on Windows and $900 and up on Linux/Unix, but is limited to 300 pages per document.

Prices for the uncrippled version start around $1250 on Windows and $3000 on Linux/Unix.

David Holroyd has updated his CSS2 DocBook stylesheet to version 0.3. This stylesheet enables CSS Level 2 savvy web browsers such as Mozilla and Opera to display DocBook XML documents. The results aren't as pretty as what the XSLT stylesheets can produce, but they're serviceable.

This release makes a number of small improvements, including support for ulink, productname, and important.

SyncroSoft has released version 5.0 of the <Oxygen/> XML editor. Oxygen supports XML, XSL, DTDs, and the W3C XML Schema Language. New features in version 5.0 include an XSLT 2.0 Editor and Debugger, XPath 2.0 evaluator, XQuery Editor, WSDL Editor, SOAP Analyzer, and SVG Viewer. It costs $128 with support. Upgrades from previous versions are $76.

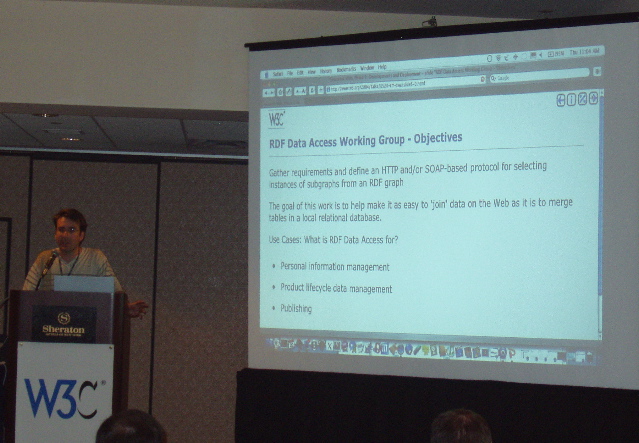

The W3C RDF Data Access Working Group has published the first public working draft of SPARQL Query Language for RDF. According to the introduction,

An RDF graph is a set of triples, each consisting of a subject, an object, and a property relationship between them as defined in RDF Concepts and Abstract syntax. These triples can come from a variety of sources. For instance, they may come directly from an RDF document. They may be inferred from other RDF triples. They may be the RDF expression of data stored in other formats, such as XML or relational databases.

SPARQL is a query language for accessing such RDF graphs. It provides facilities to:

- extract information in the form of URIs, bNodes, plain and typed literals.

- extract RDF subgraphs.

- construct new RDF graphs based on information in the queried graphs.

Here's a simple example SPARQL query adapted from the draft:

```sparql
PREFIX dc: <http://purl.org/dc/elements/1.1/>
PREFIX : <http://example.org/book/>
SELECT ?var
WHERE ( :book1 dc:title ?var )
```

The ? indicates a variable name. This query binds the title of book1 to a variable named var. There are boolean and numeric operators as well.
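To make the triple-pattern idea concrete, here is a minimal Java sketch (nothing like a real SPARQL engine) of how a single pattern such as the one above can be evaluated: each triple in an in-memory graph is compared with the pattern, constant terms must match exactly, and terms beginning with `?` are bound as variables, consistently if the same variable appears twice. Prefixes are expanded by hand, the class name `TriplePatternDemo` is hypothetical, the book titles are invented sample data, and the record type needs Java 16 or later.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class TriplePatternDemo {

    // One RDF statement: subject, property (predicate), and object, kept as plain strings.
    record Triple(String subject, String predicate, String object) {}

    // Returns one variable-binding map per triple that matches the pattern.
    static List<Map<String, String>> match(Set<Triple> graph, Triple pattern) {
        List<Map<String, String>> solutions = new ArrayList<>();
        for (Triple t : graph) {
            Map<String, String> binding = new LinkedHashMap<>();
            if (bind(pattern.subject(), t.subject(), binding)
                    && bind(pattern.predicate(), t.predicate(), binding)
                    && bind(pattern.object(), t.object(), binding)) {
                solutions.add(binding);
            }
        }
        return solutions;
    }

    // A "?name" term binds (or must agree with an earlier binding); any other term must be equal.
    // Simplification: this sketch cannot tell a literal starting with "?" from a variable.
    static boolean bind(String term, String value, Map<String, String> binding) {
        if (term.startsWith("?")) {
            String previous = binding.putIfAbsent(term.substring(1), value);
            return previous == null || previous.equals(value);
        }
        return term.equals(value);
    }

    public static void main(String[] args) {
        String dc = "http://purl.org/dc/elements/1.1/";
        String book = "http://example.org/book/";
        // Hypothetical sample data; the titles are made up for illustration.
        Set<Triple> graph = Set.of(
            new Triple(book + "book1", dc + "title", "SPARQL Tutorial"),
            new Triple(book + "book2", dc + "title", "The Semantic Web"),
            new Triple(book + "book1", dc + "creator", "Alice"));
        // Roughly: SELECT ?var WHERE ( :book1 dc:title ?var )
        System.out.println(match(graph, new Triple(book + "book1", dc + "title", "?var")));
        // prints: [{var=SPARQL Tutorial}]
    }
}
```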

Happy tenth birthday, Netscape!

The RDF Data Access Working Group has published the third public working draft of RDF Data Access Use Cases and Requirements. According to the introduction,

The W3C's Semantic Web Activity is based on RDF's flexibility as a means of representing data. While there are several standards covering RDF itself, there has not yet been any work done to create standards for querying or accessing RDF data. There is no formal, publicly standardized language for querying RDF information. Likewise, there is no formal, publicly standardized data access protocol for interacting with remote or local RDF storage servers.

Despite the lack of standards, developers in commercial and in open source projects have created many query languages for RDF data. But these languages lack both a common syntax and a common semantics. In fact, the extant query languages cover a significant semantic range: from declarative, SQL-like languages, to path languages, to rule or production-like systems. The existing languages also exhibit a range of extensibility features and built-in capabilities, including inferencing and distributed query.

Further, there may be as many different methods of accessing remote RDF storage servers as there are distinct RDF storage server projects. Even where the basic access protocol is standardized in some sense—HTTP, SOAP, or XML-RPC—there is little common ground upon which to develop generic client support to access a wide variety of such servers.

The following use cases characterize some of the most important and most common motivations behind the development of existing RDF query languages and access protocols. The use cases, in turn, inform decisions about requirements, that is, the critical features that a standard RDF query language and data access protocol require, as well as design objectives that aren't on the critical path.

RenderX has released version 4.0 of XEP, its payware XSL Formatting Objects to PDF and PostScript converter. XEP also supports part of Scalable Vector Graphics (SVG) 1.1. It's not immediately clear what, if anything, is new in 4.0. The basic client is $299.95. The developer edition with an API is $999.95. The server version is $3999.95. Updates from 3.0 range from free to full-price depending on when you bought it.

The W3C Web Services Choreography Working Group has posted the second public working draft of Web Services Choreography Description Language Version 1.0. According to the abstract,

The Web Services Choreography Description Language (WS-CDL) is an XML-based language that describes peer-to-peer collaborations of parties by defining, from a global viewpoint, their common and complementary observable behavior; where ordered message exchanges result in accomplishing a common business goal.

The Web Services specifications offer a communication bridge between the heterogeneous computational environments used to develop and host applications. The future of E-Business applications requires the ability to perform long-lived, peer-to-peer collaborations between the participating services, within or across the trusted domains of an organization.

The Web Services Choreography specification is targeted for composing interoperable, peer-to-peer collaborations between any type of party regardless of the supporting platform or programming model used by the implementation of the hosting environment.

Tatu Saloranta has posted WoodStox 1.0, a free-as-in-speech (LGPL) non-validating XML processor written in Java that implements the StAX API. "StAX specifies interface for standard J2ME 'pull-parsers' (as opposed to "push parser" like SAX API ones); at high-level StAX specifies 2 types (iterator and event based) readers and writers that used to access and output XML documents." WoodStox supports XML 1.0 and 1.1.
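For readers who haven't tried pull parsing, here is a minimal Java sketch using only the standard `javax.xml.stream` API bundled with Java 6 and later, so it runs against whichever StAX implementation the JDK locates (typically Woodstox, if its jar is on the classpath and registers itself as the provider). It uses the cursor-style `XMLStreamReader` rather than the event-object `XMLEventReader`, and reads a trimmed-down copy of the XInclude test-results format from the DOM post above. The class name `PullResults` is hypothetical.

```java
import java.io.StringReader;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

public class PullResults {

    // Collects id -> result from every <testresult> element, in document order.
    static Map<String, String> readResults(String xml) throws XMLStreamException {
        XMLInputFactory factory = XMLInputFactory.newInstance();
        XMLStreamReader reader = factory.createXMLStreamReader(new StringReader(xml));
        Map<String, String> results = new LinkedHashMap<>();
        try {
            while (reader.hasNext()) {
                // The client pulls the next event; unlike SAX, the parser never calls back.
                if (reader.next() == XMLStreamConstants.START_ELEMENT
                        && reader.getLocalName().equals("testresult")) {
                    // A null namespace URI means the attribute's namespace is not checked.
                    results.put(reader.getAttributeValue(null, "id"),
                                reader.getAttributeValue(null, "result"));
                }
            }
        } finally {
            reader.close();
        }
        return results;
    }

    public static void main(String[] args) throws XMLStreamException {
        String xml = "<testresults processor='com.elharo.xml.xinclude.DOMXIncluder'>"
            + "<testresult id='imaq-include-xml-01' result='pass'/>"
            + "<testresult id='imaq-include-xml-03' result='skipped'>"
            + "<note>DOMXIncluder does not support the xpointer scheme</note>"
            + "</testresult>"
            + "<testresult id='imaq-include-xml-06' result='fail'/>"
            + "</testresults>";
        System.out.println(readResults(xml));
        // prints: {imaq-include-xml-01=pass, imaq-include-xml-03=skipped, imaq-include-xml-06=fail}
    }
}
```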

The W3C XML Binary Characterization Working Group has posted the first public working draft of XML Binary Characterization Properties. This describes the goals/hopes/dreams the group has for a binary format to replace XML. These include:

- Accelerated Sequential Access

- Byte Preserving

- Compact

- Data Model Versatility

- Efficient Update

- Embedding of arbitrary files

- Encryptable

- Extensible at the format level

- Fragmentable

- Hinting (I have no idea what this is, and neither does the draft)

- Human Readable/Editable/Deducible

- Integratable into the Web

- Integratable into XML Family

- No Arbitrary Limits

- Fast

- Random Access

- Robust

- Round Trippable