I've installed a server side spam filter. Hopefully this will bring my e-mail load down to a manageable level, even when I'm on a low-speed connection or accessing my e-mail via pine. On the flip side, even though it's set to a very conservative level, it's now more likely that I'll miss a few messages since it's much harder to check my spam folder on the server than on the client. If you send something important to me, and you expect a response and don't get it, it might be worth contacting me in some other way. It's funny how dependent we've become on e-mail. John Cowan and I are in the same building for a few days (a relatively rare event) and we're still trading e-mail with each other while tracking down a buggy interaction between XOM and TagSoup.

Murphy's Law strikes again. Five minutes after I wrote the last entry, I'm at the coffee break and John Cowan comes up to me and says, "I got your e-mail but I didn't understand it. What did you mean?" We straightened it out quickly. Sometimes it pays to be physically present. :-)

The second day of Extreme Markup Languages 2004 began with Ian Gorman's presentation on GXParse, about which I'm afraid I can't say too much because I was busy getting ready for my own presentation on XOM Design Principles which was, I'm happy to say, well received. The big question from the audience was whether Java 1.5 and generics changed any of this. The answer is that XOM needs to support Java 1.4 (and indeed 1.3 and 1.2) so generics are not really an option. If I were willing to require Java 1.5 for XOM, the answer might be different. Still it might not be because the lack of type safety in generics is a big problem.

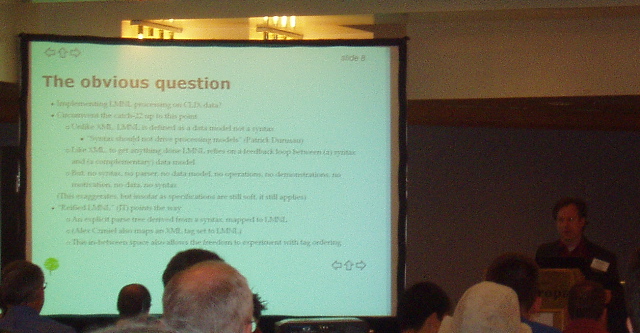

Following the coffee break, B. Tommie Usdin is giving Steve DeRose's paper (Steve wasn't here for some reason) on Markup overlap: A review and a horse. It was very entertaining, even if Usdin and the audience didn't always agree on what DeRose was actually trying to say in his paper. This amusing session was followed by Wendell Piez (who is here) delivering his own talk on Half-steps toward LMNL (Layered Markup and Annotation Language).

The question in both of these papers (and a couple of earlier sessions I missed while in the other track) is how to handle overlapping markup such as

<para>David said,

<<quote>I tell you, I was nowhere near your house.

I've never been to your house!

I don't know who took your cat.

I don't even know what your cat looks like.

</para>

<para>

Why are you accusing me of this anyway?

It's because you don't like my dog, isn't it?

You've never liked my dog!

You're a dog hater!

</quote>.

At that moment, David's bag began to roll around on the floor and meow.

</para>

Apparently, this sort of structure shows up frequently in Biblical studies.

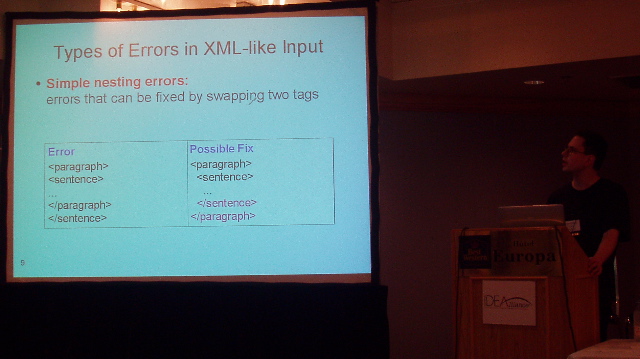

The afternoon begins with a session that looks quite interesting. Christian Siefkes is scheduled to talk about "A shallow algorithm for correcting nesting errors and other well-formedness violations in XML-like input." According to the abstract,

There are some special situations where it can be useful to repair well-formedness violations occurring in XML-like input. Examples from our own work include character-level and simple nesting errors, widowed tags, and missing root elements. We analyze the types of errors that can occur in XML-like input and present a shallow algorithm that fixes most of these errors, without requiring knowledge of a DTD or XML Schema.

I tried to do something like this with XOM and eventually decided it was just too unreliable. You couldn't be sure you were inserting the missing end-tags in the right places. I'm curious to see how or whether he's addressed this problem.

XML has "The most conservative appproach to error handling I have ever heard of." The idea is to repair the errors at the generating side, not the receving side, because different receivers might repair it differently. (Right away that's a difference with what I was trying in XOM.) XML-like input is input that is meant to be XML, but may be malformed. Possible errors include:

He's interested mostly in errors caused by programs that automatically add markup to existing documents by linguistic analysis on plain text. But also by errors caused by human authoring.

When fixing tags, need to choose heuristics. For instance, should the end-tag for a widowed start-tag be placed immediately after the start-tag or as far away as possible?

A mutlipass algorithm. The first pass tokenizes and fixes character errors such as unescaped < signs and missing quotes on attribute values. Second pass fills in missing tags. It's not always possible to do this perfectly. Overall, this looks mildly useful, but there's nothing really earth-shattering here.

For the second session of the afternoon, I swapped roooms away from a session that smelled of the semantic web into a more user-interface focused session delivered by Y. S. Kuo (co-authors N. C. Shih, Lendle Tseng, and Jaspher Wang) on "Avoiding syntactic violations in Forms-XML". Thi seems to be about some sort of XML editing forms toolkit.

Current XML editors provide a text view, a tree view, and/or a presentation view. He thinks only the presentation view is appropriate for end users. I tend to agree. He thinks narrative-focused XML editors are more mature than editors for record-like documents so he's going to concentrate on the latter. Syntactic constraints are independent of the user interface layout.

The final session of the day is a panel discussion of "Update on the Topic Map Reference Model." Hard as it is to believe, Patrick is not wearing the strangest hat at this conference.

First up is Lars Marius Garshol. In the beginning there was no topic map model. Topic maps use XLinks. PMTM4, a graph based model with three kinds of modes vs. the infoset based model. These two models were trying to do completely different things. Now there are two different models, reference Model and Data Model. No user will ever interact with the model directly. The model is marketing machinery.

Now Patrick Durusau. "The goal is to define what it means to be a 'topic map', independent of implementation detail or data model concerns" so that user of different implementations can merge their topic maps. They want more people to join the mailing list and give input.

Tommie Usdin wants to know if when the panel sees something they always all agree on whether it is or is not a topic map. The answer appears to be no, they do not know one when they see it, at least if this requires agreeing on what is and isn't a topic map, even though there is an ISO standard that describes this.

John Cowan is delivering a nocturne on TagSoup, a SAX2 parser for ugly, nasty HTML. It processes HTML as it is, not as it should be. "Almost all data is ugly legacy data at any given time. Fashions change." However, this does not work with XHTML! Empty-element tags are not supported. TagSoup is driven by a custom schema in a custom language. It generates well-formed XML 1.0. It does not guarantee namespace well-formedness. TSaxon is a fork of Saxon 6.5.3 that bundles TagSoup. Simon St. Laurent: "It's nice when people give you the same crap over and over instead of different crap." Cowan demoed TagSoup over a bunch of nasty HTML people submitted on a poster over the last couple of days. It mostly worked, with one well-formedness error (an attribute named 4).