Good morning. Welcome to day 3 of Extreme. If you're only reading this in RSS, or in one of those browsers/aggregators that can't handle the full panoply of correct RSS <Cough>Planet XMLHack</Cough>, <Cough>Artima</Cough>, you're missing a lot. Come visit the site. And to answer one frequently asked question I'll do a full text feed for this site at such time as someone writes an RSS client that gives a user experience at least equal to a real Web browser. That means at a minimum:

That's not everything, but those are some of the most common problems I've encountered; and I've tried pretty much everything that's out there. If a product satisfies criterion 1 (open source), is written in a language and on top of a toolkit I'm comfortable with, and gets pretty close to these requirements, I'd even dedicate some time to filling in the last holes. Sage is probably the closest I've come to what I want, but it's not quite there yet; and I really don't have any experience hacking on XUL. I did submit one patch to fix its font size problems, but the remaining missing features exceed my ability to fix in the time I have available.

I don't have any particular reason to prefer HTML to Atom/RSS for this site. There aren't any ads, and I rarely bother to look at my traffic stats; but I'm not going to put any effort into creating full-content feeds until there's a browser that can handle it.

This morning starts with two sessions on overlap, a perennial favorite topic here at Extreme. In fact, a brief comment I made here on Tuesday while listening to the keynotes elicited a rather lengthy explanation from one of the conference co-chairs on the conference Wiki.

This is the topic that justifies calling this conference Extreme Markup instead of Extreme XML. The overlap problem arises when things don't fit neatly into trees. For instance, suppose a quotation spans several paragraphs but begins and ends in the middle of two different paragraphs. How do you handle that? There are a number of approaches, none of them really satisfying. I wrote a little about this in Effective XML, but everything suggested there was really just a hack. One common approach is to use empty-element tags as pseudo-start and end-tags connected by attributes as in this example adapted from Syd Bauman's talk this morning:

<line>And I said to him, <quote startID="q2"/>Superman, have you not seen,</line>

<line>The embarrassment havoc I'm wreaking?<quote endID="q2"/></line>

The bottom line is that overlap is just not something XML is designed to support. Really handling overlap requires a different markup language. One popular choice is Wendell Piez and Jeni Tennison's LMNL (pronounced "liminal") which stands for "Layered Markup and Annotation Language." Jeni isn't here this year, but Paul Caton is and will be talking about LMNL in the second session this morning.

Overlap tends to arise more in the analysis of text than in the authoring of text. Overlap is a particular problem for scholars doing textual analysis of the Bible, Shakespeare, etc. I suspect Syd Bauman will be addressing this in the first session this morning, "TEI HORSEing around: Handling overlap using the Trojan Horse method." Overlap does not seem to be such a big deal for original authors of new work since it's normally possible to fit most content into reasonable trees without a great deal of effort, as long as you think about it up front. And even if you don't, it still doesn't tend to come up that often. The author has one view of the document that does fit pretty well into a tree most of the time. For textual analysis, however, different scholars are going to want to ask different questions and thus form different trees from the same text. (As Walter Perry noted yesterday, the intent of the author is not necessarily and should not necessarily be respected by the reader.) For instance, one use case might require marking up text by speaker and another by verse. Do we need to have both trees in the same document, or is the real solution a way of combining and applying different trees over the same text?

However, there's one other use case for overlapping markup; and this is a real practical killer application, even for those of us who don't analyze classical Greek and Hebrew corpora for a living. That use case is change control and revision markers. The current state of the art for change control is CVS and Subversion, which just treat XML like any other text files. I'm not sure what the state of the art is for XML change markers; probably whatever's in OpenOffice.org's format or Microsoft's WordprocessingML. I'll have to take a look at that and see how they handle it. But whatever they do, I bet it's a kludge. I don't think there's a good solution for this problem within the bounds of standard XML.

Why does an ID have to identify a single element? For instance if a quote is spread out over several paragraphs, why can't we assign the same ID to each of the paragraphs or other elements used in the quote, and thus have an ID for the whole distributed quote?

Syd Bauman of Brown University's Women Writers Project kicks off day 3 with "TEI HORSEing around: Handling overlap using the Trojan Horse method." HORSE is the hierarchy obfuscating really spiffy encoding. He's proposing YAMFORX (Yet Another Method for Overlap Representation in XML.)

He's using RELAX NG to validate. He's using Schematron to validate the matching between each pseudo-start empty-element tag and the corresponding pseudo-end empty-element tag because RELAX NG can't do this. (This would make a compelling use case for extensible RELAX NG complex types validation. Someone remind me to send this to the relaxng mailing list.) He might like to extend this scheme to many elements.
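To make the pairing constraint concrete, here is a minimal sketch in Python of the kind of check the Schematron rules have to perform. It is not Syd's schema. The startID and endID attribute names come from the example earlier in these notes, while the `<lg>` wrapper element and the `check_milestones` function are my own assumptions for illustration.

```python
import xml.etree.ElementTree as ET

def check_milestones(xml_text, tag="quote"):
    """Return a list of pairing problems for startID/endID milestone tags."""
    problems = []
    open_ids = set()   # startIDs seen but not yet closed
    seen = set()       # every startID seen so far
    root = ET.fromstring(xml_text)
    for el in root.iter(tag):          # iter() walks in document order
        start, end = el.get("startID"), el.get("endID")
        if start is not None:
            if start in seen:
                problems.append(f"duplicate startID {start}")
            seen.add(start)
            open_ids.add(start)
        if end is not None:
            if end in open_ids:
                open_ids.remove(end)
            else:
                problems.append(f"endID {end} has no earlier startID")
    problems.extend(f"startID {i} is never closed" for i in sorted(open_ids))
    return problems

# Hypothetical wrapper element <lg> added so the two lines form one document.
GOOD = """<lg>
<line>And I said to him, <quote startID="q2"/>Superman, have you not seen,</line>
<line>The embarrassment havoc I'm wreaking?<quote endID="q2"/></line>
</lg>"""
BAD = GOOD.replace('endID="q2"', 'endID="q3"')

print(check_milestones(GOOD))
print(check_milestones(BAD))
```

The point is that the check depends on document order and on state carried between elements, which is exactly what a grammar-based schema language has trouble expressing.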

It strikes me that the real problem with the TEI approach is that it's not naturally exposed in the tools and data models used by DOM, XPath, XQuery, XSLT, etc. He recognizes this too. The pseudo-start-tags and pseudo-end-tags don't become real elements when parsed. He can swap which elements overlap which. However, this may break validity.

The second session features Paul Caton talking about "LMNL Matters?" LMNL has not seen broad adoption or development.

He wants genuine third party markup like highlighting a book. That is, the author does not know about, permit, or participate in the markup. Hence Limner.

He references Foucault as saying users tend to think in hierarchies naturally, at least post-Linnaeus.

In Q&A Wendell Piez suggests that multiple overlapping hierarchies are a subset of the overlap problem.

After the coffee break, Erich Schubert discusses "Structure-preserving difference search for XML documents" (co-authors Sebastian Schaffert and François Bry). Think diff, merge, patch, CVS, Subversion, etc. but also about humans scanning diff files to identify changes. This is where diff really falls down. Plus line-based text diffing isn't really suitable for XML. Most XML differencing uses longest common subsequence where XML tokens such as tags are used as the boundaries rather than line breaks. This algorithm focuses on producing the minimal file size, but honestly who cares about this in 2005? It was important for distributing software over Usenet in 1985, but it's just not a problem today. It's more important to focus on human factors and human comprehensibility of the diff files. He shows Logilab's XPath based xmldiff format; and he's right. It's ugly and incomprehensible.
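For reference, the token-based longest common subsequence approach he is arguing against can be sketched in a few lines of Python. This is the baseline, not the structure-preserving algorithm from the talk. The regex tokenizer is a deliberate simplification of mine that ignores comments, CDATA sections, and `>` inside attribute values.

```python
import re

# Assumption: a tag is anything between < and >; everything else is text.
TOKEN = re.compile(r"<[^>]+>|[^<]+")

def tokenize(xml_text):
    """Split into tags and text runs, dropping whitespace-only text."""
    return [t for t in TOKEN.findall(xml_text) if t.strip()]

def lcs_diff(a, b):
    """Classic dynamic-programming LCS diff over two token lists."""
    n, m = len(a), len(b)
    # table[i][j] holds the LCS length of a[i:] and b[j:]
    table = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n - 1, -1, -1):
        for j in range(m - 1, -1, -1):
            if a[i] == b[j]:
                table[i][j] = table[i + 1][j + 1] + 1
            else:
                table[i][j] = max(table[i + 1][j], table[i][j + 1])
    out, i, j = [], 0, 0
    while i < n and j < m:
        if a[i] == b[j]:
            out.append(("=", a[i]))
            i += 1
            j += 1
        elif table[i + 1][j] >= table[i][j + 1]:
            out.append(("-", a[i]))
            i += 1
        else:
            out.append(("+", b[j]))
            j += 1
    out.extend(("-", t) for t in a[i:])
    out.extend(("+", t) for t in b[j:])
    return out

old = "<p>Hello <b>world</b></p>"
new = "<p>Hello <i>world</i></p>"
diff = lcs_diff(tokenize(old), tokenize(new))
for op, token in diff:
    print(op, token)
```

Notice that the start-tag and end-tag changes show up as two unrelated edits. Nothing in the output says "this element was renamed," which is the sort of structural information a human reader actually wants.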

Xcerpt query by example and the Simulation Unification algorithm inspired him. He's trying to maximize structure preservation in the diffs. They use Dijkstra's algorithm with optimized cost functions. The implementation is open source in C++ on top of libxml. The prototype is limited to XML, but the algorithms generalize to any graphs. The output format is XUpdate.

We need a lightning talk session at this conference.

Steven DeRose gives the talk I'm most looking forward to hearing this morning, "Architecture and speed of common XML operations." Optimization's always fun, if usually unnecessary; and this might give me some good ideas for speeding up XOM. (The next beta should be roughly twice as fast as the previous release, by the way. Wolfgang Hoschek found a number of inefficiencies in parsing and serialization I've now corrected.)

He starts off with a recap of basic algorithm analysis. Steve, we all know all this already. Tell us about XML! (There are ~60! particles in the universe. I didn't know that, and I sort of wanted to. Does that include photons and neutrinos or just protons, neutrons, and electrons?)

OK, he's finally started on XML 20 minutes into a 45 minute talk. Or really SGML. A naive implementation of the & operator can have exponential behavior (but better algorithms are possible.) So far this is still old news and a solved problem though.

On to XPath: what's the speed of different query operations? Same question for DOM. These are non-trivial. XPath tends to be at least O(n) where n is the number of items in the list you're operating on. DOM does not offer a preceding or following axis like XPath does. This means XPath on top of DOM has a bottleneck on the preceding and following axes. (Perhaps for this reason nobody actually does this.) If you have a list of all the nodes in the document (which DOM normally doesn't), then preceding and following are fast.
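Here is a rough Python sketch of why a document-order list makes these two axes cheap. It uses ElementTree rather than DOM and covers elements only, since ElementTree doesn't expose text nodes as objects. The `DocIndex` class and its method names are hypothetical, not anything shown in the talk.

```python
import xml.etree.ElementTree as ET

class DocIndex:
    """Document-order list plus position and parent maps for one tree."""

    def __init__(self, root):
        self.order = list(root.iter())                      # document order
        self.pos = {el: i for i, el in enumerate(self.order)}
        self.parent = {child: parent for parent in self.order for child in parent}

    def following(self, el):
        # Everything after the element's own subtree follows it.
        subtree_size = sum(1 for _ in el.iter())            # includes el itself
        return self.order[self.pos[el] + subtree_size:]

    def preceding(self, el):
        # Everything earlier in document order except the ancestors.
        ancestors = set()
        node = el
        while node in self.parent:
            node = self.parent[node]
            ancestors.add(node)
        return [e for e in self.order[:self.pos[el]] if e not in ancestors]

root = ET.fromstring("<a><b><c/><d/></b><e><f/></e><g/></a>")
idx = DocIndex(root)
by_tag = {el.tag: el for el in idx.order}

print([e.tag for e in idx.following(by_tag["b"])])
print([e.tag for e in idx.preceding(by_tag["f"])])
```

Once the list exists, the following axis is a single slice. The cost moves into building and maintaining the list, which is presumably why a general-purpose DOM doesn't keep one.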

Parameters (the N in O(N)) can be the number of nodes in the document, the depth of the tree, or the number of children. In his experiments non-CALS documents tended to be about 8 deep. CALS documents were about 13 deep. Number of children runs about 1-100. Dictionaries that put all the definitions at one level may go up to 25,000 child elements or even 125,000 for an English-Danish dictionary one audience member worked on; but normally the number of children is 100 or less.

Storage models:

Indexing helps for raw XML, but it imposes size limits on the documents you can handle.

The big problem with relational databases for XML is limited axis support. Plus they explode space usage. First child + next sibling is a common compromise. However it's one way. Parent and first-child also works OK. It's O(N) to get the preceding siblings. All preceding siblings is O(N²). All preceding siblings of all nodes is O(N³) on this implementation. Or you can work around it in your code. Instead gather a list of all the siblings in forward order and then run through it backwards. In other words, flipping the order of iteration through the loop may speed code up by three orders of magnitude or more. Collecting all the ancestors is also expensive on top of a relational database (fixed point join). Dongwook Shin has a method to pack an XML structure into a relational database. This is pretty clever stuff that I can't type fast enough to summarize. Read the paper. However, insertions are expensive, though you can use real numbers instead of integers to speed them up. And he's out of time.
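The sibling workaround is easy to demonstrate. The following Python sketch models a store that has only parent, first-child, and next-sibling links and counts link traversals for both strategies. The `Node` class, the helper names, and the choice of 1,000 children are all assumptions for illustration. A real relational store would be paying for a query on each step rather than a pointer hop.

```python
class Node:
    """A node with only parent, first-child, and next-sibling links."""
    def __init__(self, name):
        self.name = name
        self.parent = None
        self.first_child = None
        self.next_sibling = None

def build(n):
    """Build one parent with n children linked in forward order."""
    parent, prev = Node("parent"), None
    for i in range(n):
        child = Node(f"c{i}")
        child.parent = parent
        if prev is None:
            parent.first_child = child
        else:
            prev.next_sibling = child
        prev = child
    return parent

def previous_sibling(node, counter):
    """No backward link, so rescan from the first child each time."""
    cur, prev = node.parent.first_child, None
    while cur is not node:
        counter[0] += 1
        prev, cur = cur, cur.next_sibling
    return prev

def backwards_naive(parent, counter):
    """Walk the children last to first by repeated previous_sibling calls."""
    node = parent.first_child
    if node is None:
        return []
    while node.next_sibling is not None:   # find the last child
        counter[0] += 1
        node = node.next_sibling
    names = []
    while node is not None:
        names.append(node.name)
        node = previous_sibling(node, counter)
    return names

def backwards_gathered(parent, counter):
    """Gather the siblings once in forward order, then reverse the list."""
    siblings, node = [], parent.first_child
    while node is not None:
        counter[0] += 1
        siblings.append(node)
        node = node.next_sibling
    return [n.name for n in reversed(siblings)]

tree = build(1000)
naive_steps, gathered_steps = [0], [0]
naive = backwards_naive(tree, naive_steps)
gathered = backwards_gathered(tree, gathered_steps)
print(naive == gathered, naive_steps[0], gathered_steps[0])
```

Both functions return the same list. The naive version does quadratic work in the number of siblings while the gathered version does linear work, so the gap widens as the child count grows.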

Sometimes the most interesting things happen at lunch. David Dubin just clued me in to the non-existence of the phantom paper, an influential paper that is regularly cited in its field that was never written and never published. I've heard of urban myths and famous quotes that were never actually said; but this is the first time I've encountered a mythical paper. This paper is usually cited as having been written 30 years ago, so why are we just now discovering that it doesn't exist? Doesn't anyone check their references any more?

Mirco Hilbert and Andreas Witt (co-author Oliver Schoenfeld) are discussing "Making CONCUR Work". As Deborah LaPeyre said in her introduction, CONCUR is the feature in SGML nobody ever implemented.

The issue is non-hierarchies: multiple roots. Non-hierarchical markup (e.g. overlap) can often be implemented as multiple trees. CONCUR allowed one document to reference two different DTDs for two different, potentially overlapping hierarchies. They call their solution MuLaX (Multi-Layered XML). It's modeled on CONCUR but is not CONCUR. It looks like this:

<?mlx version="1.0" encoding="iso-8859-1"?>

<!DOCTYPE (1)div SYSTEM "tei/dtd/teispok2.dtd">

<!DOCTYPE (2)text SYSTEM "tei/dtd/teiana2.dtd">

<(1)div type="dialog" org="uniform">

<(2)text>

<(1)u who="Peter">

<(2)s>Hey Paul!</(2)s>

<(2)s>Would you give me

</(1)u>

<(1)u who="Paul">

the hammer?</(2)s>

</(1)u>

</(2)text>

</(1)div>

They can project this onto an annotation layer that I think is real XML. The editor tool is written in C++ with wxWidgets.
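I don't know how their projection actually works, but the idea can be sketched with a regular expression in Python: keep the tags for one layer, strip the layer prefix, and drop the tags for every other layer. This is my guess at the concept, not their tool. The `project` function is hypothetical, it skips the `<?mlx?>` and DOCTYPE lines, and it would break on a `>` inside an attribute value.

```python
import re
import xml.etree.ElementTree as ET

# Matches <(N)name ...> and </(N)name>; groups: slash, layer number, the rest.
TAG = re.compile(r"<(/?)\((\d+)\)([^<>]*)>")

def project(mlx_body, layer):
    """Keep one layer's tags as ordinary XML tags and drop all others."""
    def rewrite(match):
        slash, number, rest = match.groups()
        return f"<{slash}{rest}>" if int(number) == layer else ""
    return TAG.sub(rewrite, mlx_body).strip()

BODY = """<(1)div type="dialog" org="uniform">
<(2)text>
<(1)u who="Peter">
<(2)s>Hey Paul!</(2)s>
<(2)s>Would you give me
</(1)u>
<(1)u who="Paul">
the hammer?</(2)s>
</(1)u>
</(2)text>
</(1)div>"""

speakers = ET.fromstring(project(BODY, 1))    # utterance layer
sentences = ET.fromstring(project(BODY, 2))   # sentence layer

print([u.get("who") for u in speakers.iter("u")])
print([" ".join(s.text.split()) for s in sentences.iter("s")])
```

Each projection parses with an ordinary XML parser, and the sentence that straddles the two utterances comes out whole in the second layer.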

The next session is "A New Paradigm of Parsing XML based on Free Cursor Mobility" (FCM) by Antonio J. Sierra. This is event-based parsing (like SAX or StAX?). The difference is you can move the cursor back as well as forward. It's designed for small platforms (e.g. cell phones and PDAs). Could you implement XPath on top of this? Maybe even if not perfectly efficiently? Might be important for small devices with large documents.

The API terminology is a little idiosyncratic, brother and father instead of sibling and parent.

The idea is pretty obvious: basically it's an iterator like in a pull parser with extra methods to go backwards as well as forwards. The key is in the implementation. How will this work on streaming data over the network? It measures faster than Xparser-J (DOM) but slower than kxml2. kxml2 is the only parser in his comparison list I've heard of, and it's not state of the art.
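To show what I mean by an iterator with extra methods, here is a naive Python sketch that wraps ElementTree's `iterparse` and remembers the events it has already delivered so the cursor can step back. This is certainly not how FCM is implemented: buffering every event defeats the purpose on a small device. The `BufferedCursor` class and its `forward` and `back` methods are hypothetical names of my own.

```python
import io
import xml.etree.ElementTree as ET

class BufferedCursor:
    """Pull-style cursor that can move backwards over events already seen."""

    def __init__(self, source):
        self._stream = ET.iterparse(source, events=("start", "end"))
        self._seen = []      # (event, tag) pairs delivered so far
        self._pos = -1       # index of the current event in _seen

    def forward(self):
        """Advance one event, pulling from the parser only when needed."""
        if self._pos + 1 == len(self._seen):
            try:
                kind, element = next(self._stream)
            except StopIteration:
                return None
            self._seen.append((kind, element.tag))
        self._pos += 1
        return self._seen[self._pos]

    def back(self):
        """Step back one event; returns None at the start of the document."""
        if self._pos <= 0:
            return None
        self._pos -= 1
        return self._seen[self._pos]

cursor = BufferedCursor(io.BytesIO(b"<a><b/><c/></a>"))
trace = [cursor.forward(), cursor.forward(), cursor.forward(),
         cursor.back(), cursor.forward(), cursor.forward()]
print(trace)
```

The interesting question for streaming input is what replaces the unbounded `_seen` list, since a network stream can't be rewound the way a file can.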

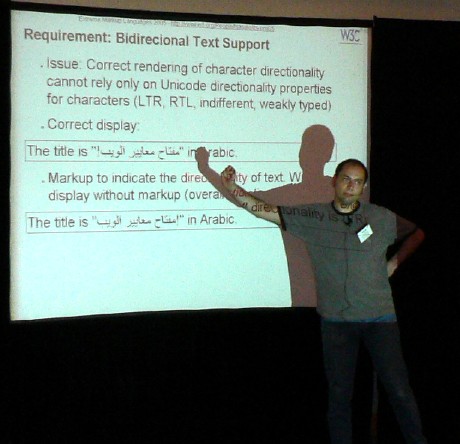

Felix Sasaki (co-authors Christian Lieske and Andreas Witt) is talking about the W3C's Internationalization and Localization Markup Requirements (which is scheduled to be published tomorrow but he gave away the URL today). More specifically he's talking about "Schema Languages & Internationalization Issues: A survey."

The question is how to work internationalization- and localization-based markup such as bidirectional markers and Ruby into schemas. Versioning is a problem. XHTML is the poster child here. DTD modularization doesn't survive validation. W3C Schemas have problems. Architectural forms may help as may RDF. Eric van der Vlist in Q&A suggests using processing instructions instead.

In the final regular session of the day Liam Quin is catching flak for the W3C about anything and everything people want to bitch about. He hopes XSLT 2 will go to candidate recommendation in a couple of months.

Tommie Usdin is very disappointed in the "nearly all" compatibility of XSLT 2 with XSL 1.1. She wants full compatibility. "It erodes confidence. It's an enormously big public relations mistake."

Syd Bauman wants the W3C to handle characters outside of Unicode (a very bad idea IMHO). He wants SDATA entities.

Simon St. Laurent wants to start simplifying standards again (as XML simplified SGML, XSL simplified DSSSL, and XLink simplified HyTime) and stop building huge specs by committee like Schemas, XQuery, and XSLT 2. Ann Wrightson agrees, and adds that she wants the W3C to knock vendor heads together to encourage more schema compatibility.

John Cowan wants the XML Core Working Group shut down to prevent people from tampering with the core of XML.

Boeing found that most of their suppliers didn't even know what XML was, and they had to train them.